Data center enclosures designed to beat the heat

A variety of cool design choices can help you keep high temperatures from impacting cables.

A survey that The Uptime Institute (www.upsite.com) conducted three years ago revealed that the average power dissipated in an enclosure was 1.2 kilowatts (kW). This year, that figure has doubled to 2.4 kW. A fully loaded blade server enclosure today could reach 18 kW, and the Uptime Institute projects dissipations of 24 kW per enclosure in the near future.

“That’s a real problem,” says Robert Sullivan, senior consultant with The Uptime Institute.

In data center applications, a basic principle is “power in equals heat out.” In other words, every kilowatt of power put into a cabinet is a kilowatt of heat you must be able to dissipate. The kilowatt levels noted by the Uptime Institute are akin to having small ovens inside your cabinet. High temperatures affect electronics, and they can also affect a cabling system.
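The “power in equals heat out” rule makes the cooling arithmetic straightforward. The minimal Python sketch below converts the enclosure loads cited above into BTU per hour and tons of refrigeration using the standard conversions (1 W is about 3.412 BTU/hr; 1 ton of refrigeration is 12,000 BTU/hr). It assumes every watt drawn becomes heat inside the cabinet, and the function name `heat_load` is hypothetical.

```python
# Standard engineering conversion constants.
BTU_PER_HR_PER_WATT = 3.412   # 1 W of electrical load ~= 3.412 BTU/hr of heat
BTU_PER_HR_PER_TON = 12_000   # 1 ton of refrigeration = 12,000 BTU/hr


def heat_load(kw: float) -> dict:
    """Heat a cabinet must shed, assuming all input power ends up as heat."""
    btu_hr = kw * 1000 * BTU_PER_HR_PER_WATT
    return {"kw": kw, "btu_per_hr": btu_hr, "tons": btu_hr / BTU_PER_HR_PER_TON}


if __name__ == "__main__":
    # Loads cited in the article: past average, current average,
    # fully loaded blade enclosure, and projected near-future load.
    for kw in (1.2, 2.4, 18.0, 24.0):
        h = heat_load(kw)
        print(f"{h['kw']:5.1f} kW -> {h['btu_per_hr']:9,.0f} BTU/hr -> {h['tons']:4.1f} tons")
```

An 18 kW blade enclosure works out to roughly five tons of cooling for a single cabinet, which is why the “small oven” comparison is apt.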

Turning up the heat

Enclosure manufacturers agree that the challenge seems to grow by the day. Because the amount of power to be delivered and dissipated has increased considerably in recent years, data center managers must also deal with the amount of cable under the raised floor and behind each cabinet. (Slack cable can end up directly behind the servers, in the path of their exhaust.)

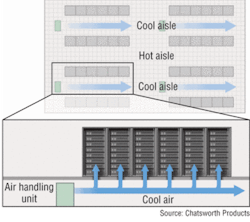

A variety of data center design options, however, can help you beat the heat. For example, cold air can be brought up through a vented floor tile in front of a cabinet. Some enclosures are designed for front-to-rear cooling, using fans to pull this cooled air in through perforated front doors and exhaust hot air out the back, where it returns to the air conditioner. This “hot aisle/cold aisle” approach also lets you keep the heated air from hitting the cables by routing them around the sides of the cabinet and into a plenum floor or overhead cable tray.

But the dynamics can be tricky. Cool air must be introduced so that it is directed up toward the cabinet, and airflow paths from the raised floor must not release cool air into the wrong places, such as the hot aisle.

Manufacturers are offering different enclosure designs to aid in the cooling process. Hubbell Premise Wiring (www.hubbell-premise.com) has integrated power and data delivery components into its cabinets that let you more easily organize cables in the space behind the cabinet’s servers, minimizing the amount of cable there and freeing up space for airflow.

“We eliminated all the cabinet structure and framework behind the servers so that space is wide open and there is no framework behind the servers,” says Robert Baxter, director of datacom marketing for Hubbell Premise Wiring.

The Hubbell design also offers vertical power distribution, with a vertical patch panel running from the cabinet’s back, top to bottom. This lets you run short patch cords from the data port to the server, and it means extra cable does not occupy the space behind the servers, thereby clearing a path for exhaust from the server fans.

Cooler at the top?

While Superior Modular Products (www.superiormod.com) also offers a front-to-rear ventilation style enclosure, it has introduced an enclosure design that takes the “chimney” approach, with ventilation and fans at the top. This design features additional ventilation through mesh doors. “We are driven by what our customers need,” explains Ed Henderson, general manager, Rack Technologies Division, Superior Modular Products.

Rittal Corp. (www.rittal-corp.com), however, believes that front-to-rear cooling is the optimal design for data centers, and its enclosure model uses fully perforated front and rear doors for server installations to support lateral cooling across the servers. The Rittal model also features an active ducting scheme that assists airflow by drawing air through the cabinet.

But not everyone agrees that a one-size-fits-all approach to data center and enclosure design is possible. Some argue that, depending on the types of equipment in use, different installations call for different data center management strategies; that is, data center and enclosure designs should be tailored to each site.

“Anytime you use a term like ‘all,’ that is too general,” says Jim McGlynn, director of engineering for consulting firm Engineering Plus LLC (www.220221.com), who helps oversee data centers for several clients. “There are different scenarios for different load applications.”

Rittal counters that cabinets designed for front-to-rear cooling of servers and storage devices are most effective at evacuating heat loads. Herb Villa, Rittal field technical manager, says the company is pushing for a cabinet design that will handle tomorrow’s expected heat loads.

“Moving forward is really what we are trying to get to,” says Villa. “This is not so much for today’s data center, but for what happens tomorrow. As the heat loads increase, will enclosures be able to handle the increased heat densities? The answer is ‘no.’”

Rittal’s position is based on a study it conducted to validate configuration options in air-cooled data centers, in which computer room air conditioners provide sufficient heat removal.

In a program that included 24 thermal dissipation tests, Rittal set out to compare industry practices for heat removal. Three enclosure configurations were tested for a minimum of 20 hours in an active data center environment: Server cabinet with fully perforated doors front and rear; equipment cabinet with solid front door and perforated rear door; and equipment cabinet with solid front door, solid or perforated rear door and roof-mounted exhaust fans.

Results revealed that server enclosures with perforated doors, and thus front-to-rear cooling, performed best, with exhaust temperatures consistently within 10° F (5.5° C) of server inlet temperatures.
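A 10°F rise from inlet to exhaust also says something about airflow. A common rule of thumb for standard air at sea level is BTU/hr ≈ 1.08 × CFM × ΔT (°F), so the airflow needed for a given load grows as the allowed temperature rise shrinks. The hypothetical helpers below apply that rule of thumb. Note that a temperature difference converts to Celsius by multiplying by 5/9 with no 32-degree offset, which is why a 10°F rise is about 5.5°C.

```python
SENSIBLE_HEAT_FACTOR = 1.08   # BTU/hr per (CFM x degF), standard air at sea level
BTU_PER_HR_PER_WATT = 3.412


def delta_f_to_delta_c(delta_f: float) -> float:
    """Convert a temperature difference (not a reading): no 32-degree offset."""
    return delta_f * 5.0 / 9.0


def required_cfm(kw: float, delta_t_f: float) -> float:
    """Airflow (CFM) needed to carry `kw` of heat with a rise of `delta_t_f` degF."""
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    btu_hr = kw * 1000 * BTU_PER_HR_PER_WATT
    return btu_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    print(f"10 degF rise = {delta_f_to_delta_c(10):.1f} degC rise")
    for kw in (2.4, 18.0):
        print(f"{kw:4.1f} kW at a 10 degF rise needs about {required_cfm(kw, 10):,.0f} CFM")
```

Halving the allowed rise doubles the required airflow, so a tight inlet-to-exhaust spread at high loads depends on moving a great deal of air through the cabinet.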

Rittal claims this front-to-rear design works best in any data center because, as Villa explains, most data centers, aside from size, share a common set of components and adhere to the same installation standards.

“More of them are willing to go to a more consistent installation, and we are seeing the hot aisle/cold aisle as the de facto standard,” Villa says. “We see underfloor airflow paths running perpendicular to cabinet rows, and we are seeing minimal usage of the underfloor spaces for cable.”

The half-full argument

The Uptime Institute counters that, ironically, if there is a common thread among today’s data centers, it’s that many of them have room to spare and many of their enclosures are only half full. In addition, the Institute says, enclosure and A/C unit layouts vary significantly from computer room to computer room.

In fact, McGlynn says, enterprise end users employ many different designs. Raised-floor depths, for instance, vary from one center to another. In a high-density data center where the existing floor does not deliver enough air, managers are forced to deepen, say, a 24-inch raised floor to 36 inches.

Such a move can support 5 to 6 kW per cabinet without heat load problems. Supplemental overhead “ducted” cooling is then added to accommodate newer load densities of 12 to 24 kW per cabinet.
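McGlynn’s figures can be turned into a simple planning check. The sketch below flags cabinets whose load exceeds what underfloor air alone is assumed to handle and reports the shortfall that supplemental (for example, overhead ducted) cooling would need to cover. The 6 kW default is an assumption taken from the upper end of the 5 to 6 kW range cited for a 36-inch raised floor, and `Cabinet` and `supplemental_cooling_needed` are hypothetical names.

```python
from dataclasses import dataclass

# Assumed planning threshold: upper end of the 5 to 6 kW per cabinet cited
# for a 36-inch raised floor. Adjust to your own measured floor capacity.
RAISED_FLOOR_LIMIT_KW = 6.0


@dataclass
class Cabinet:
    name: str
    load_kw: float


def supplemental_cooling_needed(cabinets, floor_limit_kw=RAISED_FLOOR_LIMIT_KW):
    """Map each over-limit cabinet to the kW beyond the assumed underfloor capacity."""
    return {
        c.name: round(c.load_kw - floor_limit_kw, 2)
        for c in cabinets
        if c.load_kw > floor_limit_kw
    }


if __name__ == "__main__":
    racks = [Cabinet("A1", 4.5), Cabinet("A2", 12.0), Cabinet("A3", 18.0)]
    print(supplemental_cooling_needed(racks))  # {'A2': 6.0, 'A3': 12.0}
```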

In other centers, McGlynn says data center managers may be forced to find alternate or supplemental cooling measures, such as adding an overhead cooling system. The point being: Data centers differ from one to the other, and so do the cooling strategies that will work well with them.

McGlynn is not alone in this assertion. Brad Everette, director of marketing for Superior Modular Products, says one need only look around at data centers being used by enterprise end users to see the differences.

“They are not all the same,” says Everette. “There are a lot of data centers out there that are on concrete slabs rather than a raised floor.”

Sullivan also says many data centers are unique: some need more raised-floor space and higher cabinet densities, while others use fewer cabinets spread out with ample space between them.

Sullivan says other data centers have more difficult obstacles to overcome. Some have a low raised floor, such as 12 inches, compared with the typical 24 to 30 inches. Others have low ceilings that leave less than 3 feet of space between the tops of enclosures and the dropped ceiling. Some feature misplaced cooling units, while others have cooling distributed uniformly around all four walls. And some data centers have non-uniformly distributed heat loads.

“I’m in 25 or better computer rooms per year, and very few of them are the same,” Sullivan sums up.

Some practical steps

These arguments raise the question: What role does the data center manager play in enclosure layout and design?

A few logical and simple steps include:

• Make efficient use of floor space, regardless of the style of enclosures you are working with. If you have the room, spread the enclosures out.

• Manage available power, circuit utilization and cooling capacity by area of the room.

• Employ a hot aisle/cold aisle layout, and then manage and monitor it.

• Make sure that cooling air currents are focused on the heat-generating components, while relying on ambient air for patch fields and other passive devices.
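Managing power, circuit utilization and cooling capacity by area of the room comes down to tracking a few numbers per zone. The following is a minimal sketch that assumes you can measure each zone’s IT load and know its circuit and cooling capacities. The `Zone` fields and the 80 percent warning threshold are illustrative assumptions, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class Zone:
    """One area of the room (hypothetical model; field names are illustrative)."""
    name: str
    it_load_kw: float           # measured power drawn by equipment in the zone
    circuit_capacity_kw: float  # power the zone's circuits can deliver
    cooling_capacity_kw: float  # heat the cooling serving this zone can remove


def zone_report(zone: Zone, warn_at: float = 0.8) -> dict:
    """Power and cooling utilization; alert if either exceeds warn_at (assumed 80%)."""
    power_util = zone.it_load_kw / zone.circuit_capacity_kw
    # Power in equals heat out, so the IT load is also the zone's heat load.
    cooling_util = zone.it_load_kw / zone.cooling_capacity_kw
    return {
        "zone": zone.name,
        "power_util": round(power_util, 2),
        "cooling_util": round(cooling_util, 2),
        "alert": power_util > warn_at or cooling_util > warn_at,
    }


if __name__ == "__main__":
    for z in (Zone("North", 30.0, 40.0, 35.0), Zone("South", 20.0, 40.0, 50.0)):
        print(zone_report(z))
```

Reporting by zone rather than for the whole room catches a hot area that room-wide totals would average away.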

“Like any other component of a data center, it’s about control,” says Everette. “In this case, controlling the cooling function given your individual situation.”

Dennis VanLith, product manager for enclosed systems, Chatsworth Products (www.chatsworth.com), says data center managers should distribute the servers across the data center so that not all heat loads are in one area. This means working with a facilities manager to effectively deliver cooled air to cabinets.

“If you put too much cold air on one, you will rob the other of the cold air it needs,” says VanLith.
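One way to spread heat loads, offered here as an illustrative assumption rather than any vendor’s method, is the greedy largest-first heuristic: place the highest-wattage servers first, each into whichever cabinet currently carries the least load. The hypothetical function below ignores rack space, weight, network and circuit limits, which a real placement would also have to respect.

```python
import heapq


def spread_servers(server_kw: dict, cabinets: list) -> dict:
    """Greedy largest-first placement: each server goes to the least-loaded cabinet.

    This is the classic longest-processing-time heuristic for load balancing.
    """
    heap = [(0.0, name) for name in cabinets]  # (current kW, cabinet name)
    heapq.heapify(heap)
    placement = {name: [] for name in cabinets}
    # Largest heat loads first, so smaller servers can fill in the gaps later.
    for server, kw in sorted(server_kw.items(), key=lambda item: item[1], reverse=True):
        load, cab = heapq.heappop(heap)
        placement[cab].append(server)
        heapq.heappush(heap, (load + kw, cab))
    return placement


if __name__ == "__main__":
    servers = {"s1": 4.0, "s2": 3.0, "s3": 3.0, "s4": 2.0}
    print(spread_servers(servers, ["C1", "C2"]))
```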

Henderson believes that, most importantly, you need to consider the future of the data centers in which you are working: “[You need to ask] ‘how much do you plan to grow and how much do you plan to expand the facility?’ The manager needs to be a visionary in all of this, and see what the extent of the problem is so they can address that.”
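Henderson’s question about growth can be given a rough number. Using the survey figures quoted at the start of this article (an average of 1.2 kW per enclosure three years ago and 2.4 kW today), the sketch below computes the implied compound annual growth rate and projects per-enclosure load forward. Assuming past growth simply continues is a planning assumption, not a forecast, and the function names are hypothetical.

```python
def annual_growth_rate(start_kw: float, end_kw: float, years: float) -> float:
    """Compound annual growth rate implied by two observations."""
    return (end_kw / start_kw) ** (1.0 / years) - 1.0


def project_load(current_kw: float, rate: float, years: float) -> float:
    """Load after `years` if growth stays at `rate` (an assumption, not a forecast)."""
    return current_kw * (1.0 + rate) ** years


if __name__ == "__main__":
    rate = annual_growth_rate(1.2, 2.4, 3)  # survey figures quoted in the article
    print(f"implied growth: {rate:.1%} per year")
    for horizon in (3, 5, 10):
        print(f"in {horizon:2d} years: {project_load(2.4, rate, horizon):5.1f} kW per enclosure")
```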

Instead of taking a one-size-fits-all approach, Everette says, make sure that all the important functions in the data center, including fire suppression, data cabling, security, airflow and cooling, complement each other.

BRIAN MILLIGAN is senior editor for Cabling Installation & Maintenance.

Enclosure manufacturers

The following companies manufacture enclosures, many of which employ airflow schemes and other methods of dissipating heat generated from networking equipment. The list comes from the 2005 Cabling Installation & Maintenance Buyer’s Guide. Contact information is provided here so you can obtain more detailed information on any company and its products.

AFL Telecommunications

Spartanburg, SC

864-433-0333

www.afltele.com

AMCO Engineering Co.

Schiller Park, IL

847-671-6670

www.amcoengineering.com

APW Enclosure Products

Mayville, WI

800-558-7297

www.apw-enclosures.com

AWC/US Fiber Optics

Woonsocket, RI

401-769-1600

www.awcacs.com

Black Box Network Services

Lawrence, PA

724-746-5500

www.blackbox.com

Cables To Go

Dayton, OH

937-224-8646

www.cablestogo.com

Carlon

Cleveland, OH

800-322-7566

www.carlon.com

Champion Computer Technologies

Beachwood, OH

216-831-1800

www.cctupgrades.com

Chatsworth Products Inc.

Westlake Village, CA

800-834-4969

www.chatsworth.com

CMP Enclosures

Gurnee, IL

847-244-3230

www.enclosures.com

CommScope

Claremont, NC

800-544-1948

www.commscope.com

Connectivity Technologies

Carrollton, TX

888-446-9175

www.contech1.com

DAMAC Products Inc.

La Mirada, CA

714-228-2900

www.damac.com

Data Connections Inc.

Greensboro, NC

336-854-4053

www.dataconnectionsinc.com

Electrorack Enclosure Products

Anaheim, CA

714-776-5420

www.electrorack.com

Fiber Connections Inc.

Schomberg, Ontario, Canada

800-353-1127

www.fiberc.com

Fiber Optic Network Solutions Corp.

Marlboro, MA

508-303-9352

www.fons.com

Great Lakes Case & Cabinet Co. Inc.

Edinboro, PA

814-734-7303

www.werackyourworld.com

Hendry Telephone Products

Santa Barbara, CA

805-968-5511

www.hendry.com

Hewlett-Packard Co.

Houston, TX

281-370-0670

www.hp.com/products

Hoffman

Anoka, MN

763-421-2240

www.hoffmanonline.com

Homaco

Chicago, IL

312-422-0051

www.homaco.com

Hubbell Premise Wiring

Stonington, CT

860-535-8326

www.hubbell-premise.com

Hyperlink Technologies Inc.

Boca Raton, FL

561-995-2256

www.hyperlinktech.com

ICC

Cerritos, CA

562-356-3111

www.icc.com

InnoData Ltd.

Casa Grande, AZ

888-334-3930

www.ezmt.com

Knurr

Simi Valley, CA

805-915-3700

www.knurr.com

Leviton Manufacturing Co.

Bothell, WA

425-415-7611

www.levitonvoicedata.com

Lowell Manufacturing Co.

Pacific, MO

636-257-3400

www.lowellmfg.com

Middle Atlantic Products

Riverdale, NJ

973-839-1011

www.middleatlantic.com

Ortronics Inc.

New London, CT

860-445-3900

www.ortronics.com

Panduit Corp.

Tinley Park, IL

800-777-3300

www.panduit.com

Polygon Wire Management Inc.

Port Coquitlam, BC, Canada

604-941-9961

www.softcinch.com

Premier Metal Products

Norwood, NJ

201-750-4900

www.premiermetal.com

Rittal Corp.

Springfield, OH

937-399-0500

www.rittal-corp.com

The Siemon Co.

Watertown, CT

860-945-4200

www.siemon.com

Sumitomo Electric Lightwave

Research Triangle Park, NC

800-358-7378

www.sumitomoelectric.com

Superior Modular Products

Swannanoa, NC

800-880-7674

www.superiormod.com

Systimax Solutions

Richardson, TX

800-344-0223

www.systimax.com

Tripp Lite

Chicago, IL

773-869-1111

www.tripplite.com

Unicom

City of Industry, CA

626-964-7873

www.unicomlink.com

The Wiremold Co.

West Hartford, CT

800-621-0049

www.wiremold.com

Wright Line LLC

Worcester, MA

800-225-7348

www.wrightline.com

X-Mark

Washington, PA

800-793-2954

www.metalenclosures.com