Performance, workmanship play key roles in data centers

By Patrick McLaughlin

While cabling is just a piece of the large data center puzzle, it is an important piece nonetheless.

The article that last February kicked off this magazine’s year-long study of data center facilities (“Cabling in the data center: Just a piece of the puzzle,” January 2006, p. 37) took a wholesale look at the forces driving the data center market. And as the article’s title indicates, cabling is one part of a series of complex information-technology systems that make up a data center.

While the cabling system is just one consideration in such an environment, it does have the opportunity to positively or negatively affect a data center’s efficiency.

The obvious manner in which cabling affects data center administration is the systems’ performance. A cabling-induced downtime episode would be disastrous for a data center manager, and probably also for the manufacturer whose system failed. Those systems must be robust because today’s data centers are upgrading to the highest available speeds.

“Everything that is going in new is 10-Gig,” says Carrie Higbie, global network applications market manager with Siemon (www.siemon.com). That comment can have two meanings. From a network-hardware standpoint, all commercially available 10-Gbit/sec gear is optical. Copper-based 10-Gigabit Ethernet (10-GbE) equipment is in many vendors’ development labs, but everything that has gone through the distribution pipeline requires fiber-optic infrastructure at the physical layer. So, the 10-Gig networking equipment being installed in data centers today is all-optical.

When it comes to structured cabling systems capable of supporting 10-Gbit/sec transmission, users have optical and copper options from which to choose. Although copper-based network equipment has not yet hit the market, the structured cabling systems engineered to support that data rate have been available for more than two years. In fact, under certain circumstances and over certain short distances sometimes found in data centers, legacy Category 6 systems will support 10-GbE.

Nonetheless, Higbie’s comment about new data-center installations being 10-Gig capable refers to high-performing multimode fiber-optic and pre-standard Augmented Category 6 copper cabling systems. Suffice it to say, data centers require and are receiving the highest-performing structured cabling products available.

Workmanship affects performance

In addition to the logical importance of a cabling system’s throughput capacity, workmanship issues in the design, installation, and maintenance of these systems can have long-term effects on overall data center operation. Higbie recalls some maintenance issues from her recent trips through data centers: “In many data centers, the cabling is not a planned system. They first installed some cables because they needed a server and needed connectivity to that server. Plenty of data centers have cables clothes-lined across the room. In cases like these, tracing the cables is very difficult.”

Higbie says the problem appears to be universal: she has visited data centers on several continents, and those centers with cable-management problems exhibit similar characteristics.

These management problems can create performance problems, as she points out while referencing some older circuits. “A lot of Category 5e cabling won’t pass 5e testing, either because it was pre-standard product that never met the final specifications or, in many cases, because improper management during moves/adds/changes have compromised their performance,” Higbie says. “Customers in this situation who are trying to run 1-Gig on that cable have to decide whether they want to re-terminate the cable that is in there, or install a new system.”

Management, as Higbie mentioned earlier, becomes a significant challenge. “The network must be documented,” she says. “Otherwise, seeing what is already there is difficult.” Not to mention, she adds, the National Electrical Code requirement to remove cabling that is deemed abandoned when any new project begins. “All kinds of cables, stuck in a rat’s nest, have to be removed.” Higbie has seen some data center managers literally give up on deciphering the tangled mess of existing cabling and find other routes for new installations. “Some who had underfloor systems are building overhead, and vice versa,” she notes.
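As a rough illustration of the documentation Higbie describes, the sketch below keeps one record per cable and flags those that might deserve a closer look before a new project begins. The record fields, the labels, and the flagging rule (a cable with an undocumented end that is not tagged for future use) are all assumptions made for this example; the rule is a simplification and not the National Electrical Code's wording.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CableRecord:
    """One documented cable run (hypothetical fields for illustration)."""
    label: str
    end_a: Optional[str]            # where one end terminates, None if unknown
    end_b: Optional[str]            # where the other end terminates, None if unknown
    tagged_for_future_use: bool = False


def removal_candidates(records: List[CableRecord]) -> List[str]:
    """Return labels of cables with an undocumented end and no future-use tag.

    This is a simplified screening rule assumed for the example; a real
    decision would follow the applicable code and a physical inspection.
    """
    return [
        r.label
        for r in records
        if (r.end_a is None or r.end_b is None) and not r.tagged_for_future_use
    ]


# Hypothetical inventory: labels and locations are made up.
records = [
    CableRecord("C-001", "Rack 4 patch panel, port 12", "Server row B, cabinet 3"),
    CableRecord("C-002", "Rack 4 patch panel, port 13", None),
    CableRecord("C-003", "Rack 5 patch panel, port 1", None, tagged_for_future_use=True),
]

candidates = removal_candidates(records)
print(candidates)
```

With the sample inventory above, only C-002 is flagged: C-001 has both ends documented, and C-003 is tagged for future use.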

Dense bundles

The sheer density of cables in many data centers creates a challenge even with reasonable cable-management measures, as ADC’s (www.adc.com) senior product manager for structured cabling, John Schmidt, explains: “Managers are struggling because many data centers were designed for the technologies of years ago with far less density than we see today. Proper cable management and routing are essential, and have an impact on cooling and thermal management.”

Schmidt says his company promotes its cables for use in the data center because of their small diameter, meaning a bundle occupies less space than other cables would and, therefore, does not restrict as much airflow.
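The geometry behind that claim can be checked with simple arithmetic, since a round cable's cross-section grows with the square of its diameter. The sketch below sums the cross-sections of the cables in a bundle and ignores the gaps between them. The cable count and both diameters are hypothetical values chosen for illustration, not figures from ADC or any other manufacturer.

```python
import math


def total_cross_section_mm2(cable_count: int, cable_diameter_mm: float) -> float:
    """Sum of the circular cross-sections of the cables in a bundle.

    Gaps between cables are ignored, so this understates the space a real
    bundle occupies, but the ratio between two bundles is unaffected.
    """
    radius_mm = cable_diameter_mm / 2.0
    return cable_count * math.pi * radius_mm ** 2


# Hypothetical comparison: 48 cables at two assumed outside diameters.
slim_bundle = total_cross_section_mm2(48, 6.0)
thick_bundle = total_cross_section_mm2(48, 7.5)

ratio = slim_bundle / thick_bundle
print(f"Slim bundle uses {ratio:.0%} of the thicker bundle's cross-section")
```

Under these assumed diameters, the ratio is (6.0 / 7.5) squared, or roughly 0.64, so a modest reduction in cable diameter yields a noticeably smaller bundle.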

The bottom line for handling cables can be summed up in a single word: organize. “Simply do not let them hang in airflow,” Schmidt says.

“Structured cabling could have an impact on airflow, especially in the perimeter of racks,” explains Zurica D’Souza, product information manager, data center infrastructure systems with APCC (www.apcc.com). The number of cables per device is the root of this airflow restriction, she notes, echoing Schmidt’s comment about today’s high-density devices. “If a customer is deploying high density, they must be aware that their system could impede airflow. A key to battling this is to give as much space in racks as possible.”

Schmidt adds that cable management can be an issue on both “sides” of the data center: the local area network (LAN) and storage area network (SAN) sides. “Cable management is equally important in the LAN and the SAN, but there is a distinct difference in SANs,” he points out. It is not uncommon for even a moderate SAN to have terabytes of storage space. Because SANs almost universally operate using the Fibre Channel protocol, fiber connections are ubiquitous.

“The question becomes, ‘How do you manage all the fiber coming in,’” Schmidt notes. He further explains that SAN networking equipment often does not make things any easier for cable management. “The disk arrays from SAN manufacturers do not have management built in. Cable management certainly is a challenge. Often the main problem in a SAN has been a neglected plant. Some users have many loose fiber cables, completely unmanaged.”

That challenge stands somewhat in contrast to the situation in data center LANs, at least from a workmanship standpoint. “On the LAN or Ethernet side, people generally are more familiar with cable management,” says Schmidt. Cabling-system installers in particular, thanks to their experience in commercial building environments, are familiar with and have implemented best practices for cable management.

As a result, the idea of true “structure” to the cabling in a data center frequently resides on the LAN rather than the SAN side. Schmidt says the situation varies from site to site, but in general terms, that statement often holds true: “Predominantly, the structured cabling group or professionals are responsible for the LAN side. On the SAN side, most often, the installation professionals are integrators, who deal with active equipment.”

For them, cabling takes a rather low place on the totem pole (it’s simply a means of connecting the more-important pieces of storage equipment), and quite often, that is reflected in the extent to which cable management is a consideration.

Standard guidance

With these structured-cabling issues present in data centers, a logical question is whether system designers, installers, and managers can seek guidance or assistance in any formal standards that address cabling specifically in a data center. The answer comes in the form of the TIA-942 Telecommunications Infrastructure Standard for Data Centers. Published in April 2005, the standard provides information not only on structured cabling but also on the data center overall.

“This standard is different from other TIA cabling standards because it has everything in one,” says Julie Roy, telecommunications cabling consultant and principal of C2 Consulting (www.csquaredconsulting.biz), who served as editor of the TIA-942 standard. “An Informative Annex talks about architectural, electrical, and mechanical considerations; the standard provides data center information as well as just cabling information.

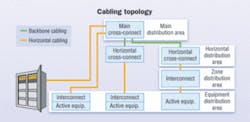

“For those concerned only with cabling, you really have to have a panoramic view of the data center to do your part correctly,” Roy adds. She says the standard was developed with several purposes in mind, including encouraging early participation of telecommunications designers in the data center design process, and providing specifications for data center telecommunications cabling, pathways, and spaces. The standard accommodates a wide range of applications, including LANs, SANs, and WANs (wide area networks); it also accommodates current and known future protocols. Importantly, especially considering some of the cable-management challenges discussed earlier, TIA-942 replaces unstructured point-to-point cabling that uses different cabling for different applications.

Among the overall data center information in the standard is the concept of tiering, under which data centers are categorized as being anywhere between Tier 1 and Tier 4, depending on a number of use factors. That tiering structure was adopted from The Uptime Institute (www.upsite.com), which originally developed the concept. The standard also includes a checklist to aid planners in determining which Tier a particular data center will fall within. “That information allows a designer to communicate with other trades in the data center,” Roy points out.

While TIA-942 does not specifically spell out differences between privately owned and collocated data centers, Roy says that, in most cases, the main difference between the two is size: “The standard does take into consideration the data center’s size, including a topology for what is called a ‘reduced’ data center and what is called a ‘distributed’ data center.”

The differences will have an impact on access control, redundancy, and heating/ventilation/air-conditioning requirements, she notes. “All data centers have these needs, so in one sense their needs are the same,” Roy adds. “The difference is in the quantities or extents of those needs.”

The goal of TIA-942’s creators, to provide information on cabling and pathways while also providing the larger perspective of the data center as a whole, reflects the reality that structured cabling is a small but important part of a data center.

While its importance should not be overstated, neither should it be understated.

PATRICK McLAUGHLIN is chief editor of Cabling Installation & Maintenance.