Hot-air isolation cools high-density data centers

Methods for isolating the chilled supply air from the hot return air are plentiful and worthy of close examination.

by Ian Seaton

The first paragraph of an article about cooling data centers will give away that article’s age. When I first started writing and speaking about data center thermal management some seven or eight years ago, I always felt compelled to open with a review of the heat-density forecasts from the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE; www.ashrae.org) and the Uptime Institute (www.upsite.com), with the motive of scaring the reader or listener into staying with me to the end. Such rhetorical ploys are no longer necessary, so instead I can get right to the point:

Contrary to some well-intentioned and self-serving sound and fury, air is quite capable of dissipating heat loads in excess of 30 kilowatts (kW) per rack, and doing it quite economically. That will be good news to the 92% of data center managers who have not yet tried liquid cooling, and the 65% who said they would never use liquid cooling in their data centers, according to research presented in “Data Center Infrastructure: Too Soon for Liquid Cooling” (www.searchdatacenter.com, July 13, 2007).

The key principle in effectively using air to cool higher heat densities is to achieve as complete isolation as possible between the chilled supply air and the heated return air. This guiding principle is the reason for laying out a data center in hot aisles and cold aisles, is supported by all reputable standards, and is cited as best practice in recent studies by the Lawrence Berkeley National Laboratory (www.lbl.gov) and Intel (www.intel.com).

Current practices

The current state of the art has seen isolation evolve from relative isolation to essentially complete isolation. This has been accomplished by various practices, including:

- Cabinets with ducted exhausts directing heated air into a suspended ceiling return plenum;

- Cabinets direct-ducted back to the cooling unit;

- Enclosed hot aisles with exhaust air ducted out of the data center space (direct-ducted to the AC, ducted into a suspended ceiling return plenum or ducted out of the building);

- Partial isolation that is nonetheless more complete than the legacy hot-aisle/cold-aisle layout. In this method, isolation is accomplished by evacuating hot aisles into a suspended ceiling return plenum through ceiling grates, or by routing exhaust air through cabinet chimney ducts into the space above the equipment in a room with much higher than normal slab-to-slab height.

In both of these latter partial-isolation strategies, the effectiveness of the isolation can be improved on an access floor by extending the perforated tiles beyond the end of the row of cabinets to help block return air recirculation around the ends of the rows.

The cabinets in these isolation topologies actually serve as the barrier in the room that secures the separation between supply air and return air and, therefore, need to include all elements of best practices, such as blanking panels in all unused rack-mount spaces, means of sealing off recirculation around the perimeters of the equipment mounting area, and a means of sealing off cable access-tile cutouts.

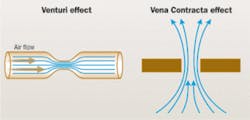

Fans are sometimes used to route the return air through and out of this barrier system, but their use can be avoided (along with the extra power consumption, heat, noise, and potential failure points) by engineering spaces that take advantage of the basic physics of the Bernoulli Equation. In particular, the Venturi effect can create high-velocity, low-pressure areas in the rear of the cabinet and the Vena Contracta effect can produce low-pressure areas at the apex of exhaust ducts. Both of these “effects” can, therefore, work together to effectively remove heat from cabinets without additional fans.

Theory and practice

High densities can be achieved with this degree of isolation because it frees the individual cabinet heat load from its dependence on the delivery of chilled air from an immediately adjacent perforated floor tile. The basis of the conventional wisdom about the limits to air cooling (typically in the range of 6 to 8 kW per cabinet) resides in the relationship among airflow, temperature rise, and heat dissipation.

This relationship can be described by the equation CFM = 3.1W/ΔT, where CFM is the cumulative air consumption of the equipment in the cabinet, W is the heat load of the cabinet in watts, and ΔT is the difference between the equipment intake temperature and its exhaust temperature.

Because there is some fixed limit on how much air can be pushed through a perforated floor tile or tile grate, the CFM part of this relationship defines the ceiling on air cooling. For example, if we were able to get 800 CFM through a tile grate and we had equipment in the cabinet that operated with a 20°F temperature rise, we could figure we could cool about 5.2 kW in that cabinet:

800 CFM = 3.1W / 20°F

W = (800 × 20) / 3.1 ≈ 5,161.3 watts
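The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the article's rule-of-thumb equation (CFM = 3.1W/ΔT); the function and constant names are hypothetical, and the 30 kW case simply reuses the same 20°F temperature rise as an assumption.

```python
# Rule-of-thumb constant from the article: CFM = 3.1 * W / dT (dT in °F).
AIRFLOW_CONSTANT = 3.1


def required_cfm(watts: float, delta_t_f: float) -> float:
    """Airflow (CFM) needed to carry away `watts` at a given temperature rise."""
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    return AIRFLOW_CONSTANT * watts / delta_t_f


def coolable_watts(cfm: float, delta_t_f: float) -> float:
    """Heat load (W) that a given airflow can carry at a given temperature rise."""
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    return cfm * delta_t_f / AIRFLOW_CONSTANT


if __name__ == "__main__":
    # The article's example: 800 CFM through one tile grate, 20°F rise.
    print(round(coolable_watts(800, 20), 1))  # 5161.3
    # Airflow a 30 kW cabinet would need at the same (assumed) 20°F rise.
    print(round(required_cfm(30_000, 20)))    # 4650
```

Under these assumptions, a 30 kW cabinet would need roughly 4,650 CFM, far more than the 800 CFM a single tile grate was assumed to deliver, which is why the per-tile limit caps conventional air cooling.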

But by removing the return air from the room, that dependency on chilled air being delivered from a near-enough source to diminish the effects of mixing with recirculated return air is broken. The delivery points for the chilled air no longer matter; chilled air can enter the room anywhere, and as long as the room remains pressurized, there will be, by definition, adequate air volume in the room to cool any heat load any place in the room.

Elevated supply-air temperatures

This isolation between supply air and return air also means that supply-air temperatures can be run significantly higher. Typically, supply air is set around 55°F in order to meet the ASHRAE TC9.9 recommendation for air delivered to equipment to fall in the 68 to 77°F range. The lower supply air temperature is required to compensate for the natural mixing that takes place in the data center between supply air and recirculated return air, and typically results in significant temperature stratification from equipment lower in the cabinet to equipment higher in the cabinet.

Without any return air in the room, there is no longer any such stratification and, therefore, no longer a need to deliver supply air below the required temperature for the equipment. In a room with such isolation, it is safe to raise that supply air up to the 68 to 77°F range.

Higher supply-air temperatures, in conjunction with cooling unit economizers, offer a significant economic benefit for the data center operator. Historically, the return-on-investment for water side economizers for data center applications has been a hard sell in most geographic areas. Chiller plants typically need to keep water at 42°F to deliver 55°F supply air; assuming a 5°F approach temperature, the economizer would take over for the chiller plant only when the temperature dropped below 37°F.

With the supply-air temperature allowed to creep into the 70s°F, however, the condenser water can run around 55°F and, with a 5°F approach temperature, the economizer can kick in whenever the temperature drops below 50°F. At that temperature, data center operators in most areas of the country would have access to significantly more “free” days of cooling. In fact, Intel reported a $144,000 energy savings for a medium-sized data center in the Pacific Northwest that depended first on the effective return-air isolation to allow higher supply-air temperature. (Reference: “Reducing Data Center Energy Consumption with Wet Side Economizers,” Intel white paper authored by Doug Garday, May 2007.)
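The economizer reasoning in the last two paragraphs reduces to a subtraction and a count. The sketch below is a minimal illustration, not a sizing tool: the function names are hypothetical, the hourly temperature list is made-up sample data rather than weather records, and it assumes the relevant reading is an outdoor temperature compared directly against the threshold.

```python
from typing import Iterable


def economizer_threshold_f(water_temp_f: float, approach_f: float = 5.0) -> float:
    """Temperature below which the economizer can take over from the chiller."""
    return water_temp_f - approach_f


def free_cooling_hours(hourly_temps_f: Iterable[float], threshold_f: float) -> int:
    """Count the hours whose temperature is below the economizer threshold."""
    return sum(1 for temp in hourly_temps_f if temp < threshold_f)


if __name__ == "__main__":
    # Made-up sample readings (°F), for illustration only.
    sample_temps = [30, 36, 41, 45, 49, 52, 58, 63]

    legacy = economizer_threshold_f(42)    # 42°F water, 55°F supply air
    elevated = economizer_threshold_f(55)  # 55°F water, supply air in the 70s°F

    print(legacy, free_cooling_hours(sample_temps, legacy))      # 37.0 2
    print(elevated, free_cooling_hours(sample_temps, elevated))  # 50.0 5
```

Raising the water temperature from 42°F to 55°F moves the threshold from 37°F to 50°F, so more of the year qualifies for “free” cooling; the actual gain depends entirely on local climate data.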

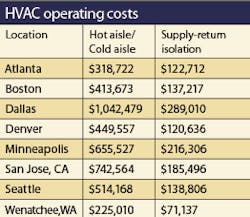

Additionally, McKinstry Co. (www.mckinstry.com) has analyzed the economic benefits of deploying chilled-water air handlers with evaporative air economizers and absolute separation of supply air and return air in the data center. Five-megawatt data center tenants, in eight different geographic areas, found significant cooling-cost savings versus a benchmark facility with standard open hot aisles and cold aisles, using chilled-water computer-room air-conditioning (CRAC) units with water economizers.

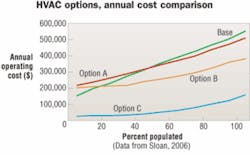

The McKinstry study also looked at various options for improving the total efficiency of the standard data center open hot-aisle/cold-aisle arrangement compared to the fully isolated arrangement at various levels of loading, and found the segregated supply-air and return-air models delivered lower operating costs than all other combinations.

While the variations between regions can be extreme, the chart of average HVAC operating costs (page 48) nevertheless provides an accurate view of the relative operating economies of the different systems. The “base” data center employs chilled-water CRAC units with water side economizer, with the room arranged according to hot-aisle/cold-aisle best practices. Option A employs chilled-water air handlers with air side economizer and steam humidifier, again with hot-aisle/cold-aisle best practices. Option B employs chilled-water air handlers with evaporative air economizer and hot-aisle/cold-aisle best practices. Option C employs the same cooling plant as Option B, but assumes absolute separation between the supply air and return air.

Isolation versus other methods

While the evidence clearly favors the model of fully isolating supply air from return air over standard hot-aisle/cold-aisle arrangements with open space return-air paths for both lower operating costs and higher densities, there are also some implications regarding the claims of proponents of closely coupled liquid-cooled solutions.

For example, it is claimed that closely coupled liquid-cooled solutions are more economical to implement incrementally, thus saving the cost of operating a full-blown data center cooling plant when a room is not fully built out. The McKinstry Co. data suggest otherwise, however, with the extremely low operating costs for Option C when the space is less than 50% populated.

In addition, lower operating costs in general have been central to the value proposition offered for closely coupled liquid-cooled solutions, particularly as a rebuttal to objections over their significantly higher acquisition costs. In fact, the keynote address at this past spring’s AFCOM Data Center World (www.afcom.com), delivered by HP’s (www.hp.com) Christian Belady, explained the power usage effectiveness (PUE) metric for measuring data center power and cooling efficiency (total data center power consumption divided by IT power consumption); it suggested a PUE of 1.6 was a measure of efficiency toward which the industry could aspire with closely coupled liquid cooling solutions.

Compare this to today’s typical data center, with a PUE around 3.0; to the PUE of perhaps 2.4 that can be achieved by implementing best practices in hot-aisle/cold-aisle arrangements; or even to a computational fluid dynamics (CFD)-enabled PUE of 2.0. The previously cited Intel white paper on wet side economizers uses the HVAC effectiveness ratio as a metric to demonstrate the efficiency of its solution model, but that 6.19 ratio on an IT load of 5,830 kW equated to a PUE of approximately 1.3.
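To make the link between the two metrics concrete, here is a rough sketch. It assumes that HVAC effectiveness is defined as IT power divided by HVAC power, which is a common definition but is not spelled out in this article; the function names are hypothetical. The gap between the HVAC-only figure and the quoted PUE of about 1.3 would be the remaining facility overhead, such as power distribution losses.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def hvac_kw_from_effectiveness(it_kw: float, effectiveness: float) -> float:
    """HVAC power, ASSUMING effectiveness = IT power / HVAC power."""
    if effectiveness <= 0:
        raise ValueError("effectiveness ratio must be positive")
    return it_kw / effectiveness


if __name__ == "__main__":
    it_load_kw = 5830.0  # IT load cited in the article
    ratio = 6.19         # HVAC effectiveness ratio cited in the article

    hvac_kw = hvac_kw_from_effectiveness(it_load_kw, ratio)
    # PUE if HVAC were the only overhead on top of the IT load.
    print(round(pue(it_load_kw + hvac_kw, it_load_kw), 2))  # 1.16

    # Overhead beyond HVAC implied by the article's PUE of roughly 1.3.
    other_kw = 1.3 * it_load_kw - it_load_kw - hvac_kw
    print(round(other_kw))
```

Under that assumed definition, cooling alone accounts for a PUE contribution of about 0.16, so most of the distance between 1.0 and 1.3 would come from non-HVAC overhead.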

In addition, the eight data center models in the McKinstry study showed cooling-operating costs (HVAC + electricity + water + maintenance) ranging from only 5.1% up to 9.1% of the total data center operating costs (IT + UPS + transformers). Proponents of close-coupled cooling solutions also claim to make more floor space available for IT; however, the McKinstry study shows an average of less than 2% of the data center floor space is taken up by indoor HVAC equipment. Therefore, with recognized higher acquisition costs and apparently without the touted operating cost advantages, close-coupled solutions do not appear to offer the user benefits that overcome redundancy and fail-over concerns.

Finally, while the message is clear regarding the operational advantages of air cooling where maximum isolation is maintained between supply air and return air, particularly in conjunction with centralized chilled-water air handlers and evaporative economizers, the data center operator can still reap benefits in a space equipped with conventional chilled-water CRAC units and water side economizers.

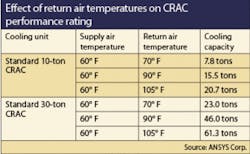

The primary benefit derives from increased cooling-unit efficiency because of higher return-air temperatures resulting from eliminating the cooling effect of mixing with bypass air in the data center. These efficiency gains can be quite remarkable (see table at left). Employing the strategy of isolating supply air from return air can provide an economical path for making incremental density increases.

A paradigm-shifting design

I don’t want to leave you underestimating the complexity involved with implementing a well-engineered air-cooled data center with effective isolation between cool supply air and heated return air. Building this style of data center, however, is not really any more complex than any data center design and construction project, whether it be a conventional hot-aisle/cold-aisle arrangement or a close-coupled liquid-cooled variation. It just requires making a bit of a paradigm shift up front; you will find that most HVAC engineers will understand these values quite clearly.

The rewards are higher operating efficiencies, lower energy costs, and the peace of mind that comes with knowing you have created an environment that minimizes the kinds of complexity that jeopardize uptime availability.

IAN SEATON is technology marketing manager for Chatsworth Products Inc. (www.chatsworth.com).