A 24-fiber interconnect solution: The right migration path to 40/100G

From the September 2012 issue of Cabling Installation & Maintenance magazine

Maximum fiber use, reduced cable congestion and increased fiber density make 24-fiber trunking and interconnect a preferred option to prepare for next-generation speeds.

By Kam Patel, TE Connectivity

Video views on YouTube climbed from 100 million per day in 2006 to well over 4 billion per day in 2012. Song downloads from iTunes increased from 5 billion in 2008 to more than 16 billion by 2012. According to Cisco’s Visual Networking Index Global Mobile Data Traffic Forecast Update 2011-2016, average smartphone use tripled in 2011 and forecasts estimate that by the end of 2012, the number of mobile-connected devices will exceed the number of people on earth. Over the next two years, our world will create, process and store more data than in the entire history of mankind.

In conjunction with the media demands of today’s Internet and the mobile network boom, the amount of data transmitted at the enterprise business level is also rapidly climbing as networks need to support more devices and advanced applications than ever before. The Cisco Visual Networking Index forecasts that business data traffic will grow 22 percent between 2011 and 2016, with business videoconferencing alone increasing sixfold. In fact, it is estimated that by 2016, the gigabyte equivalent of all the movies ever made will cross global IP-based networks every three minutes, with North America accounting for 3.3 exabytes per month—or more than 3 billion gigabytes.

Data centers are at the heart of the tremendous amount of business data needing to be transmitted, processed and stored. In the data center, fiber-optic links are vital for providing the bandwidth and speed needed to transmit huge amounts of data to and from a large number of sources. More bandwidth is also needed to support the use of virtualization that consolidates multiple computer platforms onto single physical servers to reduce the amount of equipment and improve space utilization. As data center managers strive to provide the bandwidth they need, transmission speeds at core switches are increasing and backbone infrastructures are experiencing a significant upsurge in the amount of fiber-optic cabling. Typical transmission speeds in the data center are beginning to increase beyond 10 Gbits/sec. In 2010 the Institute of Electrical and Electronics Engineers (IEEE) ratified the 40- and 100-Gbit Ethernet standard, and already leading switch manufacturers are offering 40-GbE blades and more than 25 percent of data centers have implemented these next-generation speeds. It is anticipated that by the end of 2013, nearly half of all data centers will follow suit. Today’s enterprise businesses are therefore seeking the most effective method to migrate from current 10-GbE data center applications to 40/100-GbE in the near future.

A 24-fiber data center fiber trunking and interconnect solution allows enterprise data center managers to effectively migrate from 10-GbE to 40/100-GbE. By leveraging 24-fiber trunk cable technology, such a system offers the right 10-40-100-GbE migration path with the following characteristics:

- Support for 10-, 40- and 100-GbE

- Maximum use of deployed fibers

- Space savings and reduced congestion

- Better airflow and energy efficiency than other cabling options

- Increased density in fiber panels

- An easy, cost-effective migration scheme

- Overall better return on investment

What standards say

In 2002, the IEEE ratified the 802.3ae standard for 10-GbE over fiber using duplex-fiber links and vertical-cavity surface-emitting laser (VCSEL) transceivers. Most 10-GbE applications use duplex LC-style connectors; in these setups, one fiber transmits and the other receives. Standards efforts aimed at finding a cost-effective method to support next-generation speeds of 40- and 100-Gbits/sec, and in 2010, the IEEE ratified the 802.3ba standard for 40- and 100-GbE. Similar to how transportation highways are scaled to support increased traffic with multiple lanes at the same speed, the 40- and 100-GbE standards use parallel optics, or multiple lanes of fiber transmitting at the same speed. Running 40-GbE requires 8 fibers, with 4 fibers each transmitting at 10-Gbits/sec and 4 fibers each receiving at 10-Gbits/sec. Running 100-GbE requires a total of 20 fibers, with 10 transmitting at 10-Gbits/sec and 10 receiving at 10-Gbits/sec. Both scenarios call for using high-density multi-fiber MPO-style connectors.
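The lane arithmetic described above can be sketched in a few lines of Python. This is only an illustration of the counts given in the text; the function and field names are hypothetical and do not come from the standard.

```python
# Lane arithmetic for parallel optics, using the figures quoted in the text.
LANE_RATE_GBPS = 10  # every fiber lane runs at 10 Gbits/sec


def parallel_link(lanes_per_direction):
    """Return aggregate rate and fiber counts for a parallel-optics link.

    Hypothetical helper: one fiber per lane in each direction.
    """
    return {
        "rate_gbps": lanes_per_direction * LANE_RATE_GBPS,
        "tx_fibers": lanes_per_direction,
        "rx_fibers": lanes_per_direction,
        "total_fibers": 2 * lanes_per_direction,
    }


forty_gbe = parallel_link(4)     # 4 lanes each way
hundred_gbe = parallel_link(10)  # 10 lanes each way

print("40-GbE:", forty_gbe)
print("100-GbE:", hundred_gbe)
```

The same helper yields 8 fibers for 40-GbE and 20 fibers for 100-GbE, which are the counts the rest of the article builds on.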

For 40-GbE, a 12-fiber MPO connector is used. Because only 8 optical fibers are required, typical 40-GbE applications use only the 4 left and 4 right optical fibers of the 12-fiber MPO connector, while the inner 4 optical fibers are left unused.

To run 100-GbE, two 12-fiber MPO connectors can be used, one transmitting 10-Gbits/sec on 10 fibers and the other receiving 10-Gbits/sec on 10 fibers. However, the recommended method for 100-GbE is to use a 24-fiber MPO-style connector, with the 20 fibers in the middle of the connector transmitting and receiving at 10-Gbits/sec and the outermost fiber at each end of the top and bottom rows (4 fibers in total) left unused.
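As a rough visual aid, the connector usage described in the two preceding paragraphs can be drawn as simple position maps. The sketch below assumes positions are numbered 1 through 12 from left to right in each row, and it marks only used versus unused positions; it does not assign transmit or receive roles, since the text does not say which side or row carries which direction.

```python
# Used ("X") and unused (".") fiber positions, per the description in the text.
# Assumption: positions are numbered 1..12 left to right within each row.

def mpo12_for_40g():
    """12-fiber MPO: the 4 left and 4 right positions are used, inner 4 are not."""
    return [pos <= 4 or pos >= 9 for pos in range(1, 13)]


def mpo24_for_100g():
    """24-fiber MPO (two rows of 12): the middle 10 of each row are used."""
    row = [2 <= pos <= 11 for pos in range(1, 13)]
    return [list(row), list(row)]


def render(row):
    """Draw one row of positions as a string of X (used) and . (unused)."""
    return "".join("X" if used else "." for used in row)


print("40-GbE, 12-fiber MPO :", render(mpo12_for_40g()))
for label, row in zip(("top", "bottom"), mpo24_for_100g()):
    print(f"100-GbE, 24-fiber MPO {label:6s}:", render(row))
```

Counting the marked positions gives 8 used fibers for 40-GbE and 20 for 100-GbE, with 4 unused positions in each connector.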

To keep costs down, the objective of the IEEE was to leverage existing 10-GbE VCSELs and OM3/OM4 multimode fiber. The standards therefore relaxed transceiver requirements, allowing 40- and 100-GbE to use arrayed transceivers containing 4 or 10 VCSELs and detectors, respectively. This kept the cost of a 40-GbE transceiver from being 4 times that of an existing 10-GbE transceiver, and the cost of a 100-GbE transceiver from being 10 times as much. According to the IEEE 802.3ba standard, multimode optical fiber supports both 40- and 100-GbE over link lengths up to 150 meters when using OM4 optical fiber and up to 100 meters when using OM3 optical fiber.
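A planner might turn the reach limits quoted above into a quick check when auditing existing multimode links. The following is a minimal sketch under that assumption; the dictionary and function names are hypothetical, and only the two multimode reach figures cited in this article are included.

```python
# Maximum 40/100-GbE multimode link lengths as quoted in the text (meters).
MAX_REACH_M = {"OM3": 100, "OM4": 150}


def supports_40_100gbe(fiber_type, link_length_m):
    """Return True if a multimode link is within the quoted reach limit."""
    return link_length_m <= MAX_REACH_M[fiber_type]


# Example: a 125-meter run is within reach on OM4 but not on OM3.
for fiber in ("OM3", "OM4"):
    print(fiber, "125 m ->", supports_40_100gbe(fiber, 125))
```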

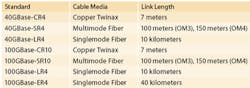

It is important to note that singlemode fiber can also be used for running 40- and 100-GbE to much greater distances using wavelength division multiplexing (WDM). While this is ideal for longer-reach applications like long campus backbones, metropolitan area networks and other long-haul applications, singlemode fiber components have finer tolerances, and the optoelectronics used for sending and receiving over singlemode are much more expensive. Singlemode is therefore not feasible for most data center applications of less than 150 meters. Copper twinax cable is also capable of supporting 40- and 100-GbE but only to distances of 7 meters.

According to a data center study conducted by Building Services Research and Information Association (BSRIA; www.bsria.co.uk), which surveyed 335 respondents in 6 countries, 29 percent of respondents plan to use OM3/OM4 multimode fiber for 40-GbE and 41 percent plan to use OM3/OM4 for 100-GbE. On the other hand, only 11 percent plan to use copper twinax for both 40- and 100-GbE applications. The table (p. 14) shows the 40- and 100-GbE standards with their associated cabling media and link lengths.

Why 24 is the right migration path

Knowing that 40- and 100-GbE are just around the corner, and already a reality for some, many data center managers are striving to determine which physical-layer solution will support 10-GbE today while providing the best, most-effective migration path to 40- and 100-GbE. While many solutions on the market recommend the use of 12-fiber multimode trunk cables between core switches and the equipment distribution area in the data center, TE Connectivity recommends and offers a standards-based migration path with the use of 24-fiber trunk cables.

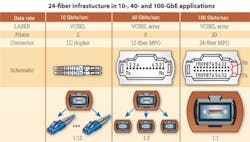

The use of 24-fiber trunk cables between switch panels and equipment is a common-sense method. In this scenario, 24-fiber trunk cables with 24-fiber MPOs on both ends are used to connect from the back of the switch panel to the equipment distribution area. For 10-GbE applications, the 24 fibers form 12 duplex pairs, each carrying 10 Gbits/sec, for a total of 12 links. For 40-GbE applications, which require 8 fibers (4 transmitting and 4 receiving), a 24-fiber trunk cable provides a total of three 40-GbE links. For 100-GbE, which requires 20 fibers (10 transmitting and 10 receiving), a 24-fiber trunk cable provides a single 100-GbE link. Why is this more advantageous than using 12-fiber trunk cables? It all comes down to a better return on investment and reduced future operating and capital expense.
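The link counts above follow from whole-number division of the trunk's fiber count by the fibers each link needs. A small sketch, with hypothetical names and the fiber counts taken from the text (2 for a duplex 10-GbE link, 8 for 40-GbE, 20 for 100-GbE):

```python
TRUNK_FIBERS = 24

# Fibers consumed by one link at each speed, per the text.
FIBERS_PER_LINK = {"10-GbE": 2, "40-GbE": 8, "100-GbE": 20}


def links_per_trunk(speed, trunk_fibers=TRUNK_FIBERS):
    """Number of complete links that fit on one trunk cable."""
    return trunk_fibers // FIBERS_PER_LINK[speed]


for speed in FIBERS_PER_LINK:
    print(f"{speed}: {links_per_trunk(speed)} link(s) per 24-fiber trunk")
```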

Maximum fiber use. As mentioned previously, 40-GbE uses 8 fibers of a 12-fiber MPO, leaving 4 fibers unused. When using a 12-fiber trunk cable, those same 4 fibers are unused. For example, three 40-GbE links using three separate 12-fiber trunk cables would result in a total of 12 unused fibers, or 4 fibers unused for each trunk.

With the use of 24-fiber trunk cables, data center managers actually get to use all the fiber and leverage their complete investment. Running three 40-GbE links over a single 24-fiber trunk cable uses all 24 fibers of the trunk cable. This recoups the 33 percent of fibers that would be lost with 12-fiber trunk cables, providing a much better return on investment. At 100-GbE, which requires 20 fibers, a total of 4 fibers are left unused when using either two 12-fiber trunk cables or a single 24-fiber trunk cable. However, additional benefits come into play for 100-GbE, and 12-fiber trunk cables are not the recommended configuration for 100-GbE.
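The stranded-fiber comparison can be worked through with a short calculation. The sketch below is illustrative only; `trunk_usage` is a hypothetical helper that assumes a link may be split across several trunk cables when it needs more fibers than one cable provides, as in the case of 100-GbE over two 12-fiber trunks.

```python
import math


def trunk_usage(links, fibers_per_link, trunk_fibers):
    """Count trunks needed, fibers deployed and fibers left unused."""
    if fibers_per_link <= trunk_fibers:
        # One or more whole links fit on each trunk.
        links_on_one_trunk = trunk_fibers // fibers_per_link
        trunks = math.ceil(links / links_on_one_trunk)
    else:
        # A single link has to span several trunks.
        trunks = links * math.ceil(fibers_per_link / trunk_fibers)
    deployed = trunks * trunk_fibers
    unused = deployed - links * fibers_per_link
    return {"trunks": trunks, "deployed": deployed, "unused": unused}


# Three 40-GbE links (8 fibers each) on 12-fiber versus 24-fiber trunks.
print("40-GbE x3, 12-fiber:", trunk_usage(3, 8, 12))
print("40-GbE x3, 24-fiber:", trunk_usage(3, 8, 24))

# One 100-GbE link (20 fibers) on 12-fiber versus 24-fiber trunks.
print("100-GbE x1, 12-fiber:", trunk_usage(1, 20, 12))
print("100-GbE x1, 24-fiber:", trunk_usage(1, 20, 24))
```

For three 40-GbE links, the 12-fiber case deploys 36 fibers and strands 12 of them, which is the one-third loss cited above, while the 24-fiber case strands none. For 100-GbE, both options leave 4 fibers unused.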

Reduced cable congestion. Another benefit to using 24-fiber trunk cables is less cable congestion in already-crowded pathways. Space is premium in the data center, and congested cable pathways can make cable management more difficult and impede proper airflow needed to maintain efficient cooling and subsequent energy efficiency.

Our 24-fiber trunk cables are only slightly larger than 12-fiber trunk cables, at 3.8 mm in diameter compared to 3 mm. That means that 24-fiber trunk cables provide twice the amount of fiber in less than 21 percent more space. For a 40-GbE application, it takes three 12-fiber trunk cables to provide the same number of links as a single 24-fiber trunk cable, or about 1.5 times more pathway space.

Increased fiber density. Because 24-fiber MPO connectors offer a small footprint, they can ultimately provide increased density in fiber panels at the switch location. With today’s large core switches occupying upwards of one-third of an entire rack, density in fiber switch panels is critical.

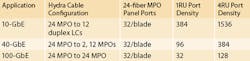

Our data center fiber trunking and interconnect solution consists of 24-fiber trunk cables running from the back of the fiber panels to the equipment distribution area. Within the rack or cabinet, hydra cables plug into the 24-fiber MPO ports on the front of the fiber panel and connect to individual switch ports. Hydra cables feature a single 24-fiber MPO connector on one end; the other end has 12 duplex LC connectors for 10-GbE applications, three 12-fiber MPO connectors for 40-GbE, or a 24-fiber MPO connector for 100-GbE. With a single 1-RU fiber panel able to provide a total of 32 MPO adapters, the density is 384 10-GbE ports in 1 RU (duplex LC connectors) or 96 40-GbE ports in 1 RU (12-fiber MPOs). The table (p. 16) demonstrates the various densities the system offers.
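The density figures come from multiplying the 32 MPO ports in a 1-RU panel by the number of switch ports each hydra cable breaks out to. A brief sketch of that arithmetic follows; the names are hypothetical, and the 100-GbE figure is inferred from the same multiplication rather than quoted from the text.

```python
MPO_PORTS_PER_RU = 32  # 24-fiber MPO ports in one 1-RU panel, per the text

# Switch ports served by one 24-fiber hydra cable at each speed.
PORTS_PER_HYDRA = {
    "10-GbE (duplex LC)": 12,
    "40-GbE (12-fiber MPO)": 3,
    "100-GbE (24-fiber MPO)": 1,  # inferred, not stated in the text
}

density_per_ru = {
    speed: MPO_PORTS_PER_RU * ports for speed, ports in PORTS_PER_HYDRA.items()
}

for speed, ports in density_per_ru.items():
    print(f"{speed}: {ports} ports per RU")
```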

Easier, cost-effective migration path. The 24-fiber data center fiber trunking and interconnect solution offers a simple and cost-effective migration path from 10-GbE to 40- and 100-GbE, providing future-readiness for three generations of active equipment. With 24-fiber trunk cables effectively supporting all three applications, there is no need to recable the pathways from the back of the switch panel to the equipment distribution area; all that cabling remains permanent and never has to be touched.

That means that data center managers can easily migrate to higher speeds, with less time and complexity. With 24-fiber trunk cables that offer guaranteed performance for 10-, 40- and 100-GbE, upgrading the cabling infrastructure is as simple as upgrading the hydra cables or cassettes and patch cords to the equipment.

Improving ROI

The 24-fiber data center fiber trunking and interconnect solution is ideal for medium- to large-size data center customers and markets, from healthcare and finance to broadcasting and government—essentially anyone that foresees the need to update from 10- to 40/100-GbE in the future. With guaranteed support for all three applications, the ability to use all the fiber deployed, reduced cable congestion and better airflow, higher port densities in fiber panels and an easy migration scheme, the data center fiber trunking and interconnect solution with 24-fiber trunk cables offers lower future capital and operating expense.

Unlike other solutions on the market that leave one-third of the fiber investment stranded, impede airflow with overcongested pathways, and provide inadequate port densities, a 24-fiber data center trunking and interconnect solution helps data center managers effectively and efficiently support today’s high-speed requirements. With permanent 24-fiber trunk cables that eliminate the need for complete and complex reconfigurations all the way from the switch to the equipment, the solution offers an easy, cost-effective method for upgrading from 10-GbE to 40- and 100-GbE.

As the amount of data being created, processed and stored reaches an all-time high, data center managers need to prepare themselves today to migrate to 40/100-GbE tomorrow. With increasing concerns about the cost to upgrade and the complexity involved, data center managers need a solution that simplifies the process and provides better return on investment, while meeting both current and future needs. A 24-fiber data center fiber trunking and interconnect solution is the right migration path to 40/100-GbE.

Kam Patel is business line manager for enterprise data center solutions with TE Connectivity (www.te.com).

Report eyes convergence, visibility of 10-, 40- and 100-Gbit/sec networks

A recent report produced by Enterprise Management Associates (EMA) maps out “a new visibility architecture for managing 10G, 40G and 100G networks.”

The report’s premise is as follows: Convergence is happening across IT, within network infrastructures, at network endpoints, between applications and communications, etc. The list goes on to include most aspects of infrastructure and security management, except when it comes to visibility tools.

EMA contends that application-aware packet inspection tools are an essential element in network and security operations, and are growing in popularity and number because they deliver highly granular visibility. “And yet,” the report points out, “each time one of these systems is deployed, it requires another access point, more rack space, and in most cases yet another stream-to-disk packet storage array.”

The analysis warns that while capital and operational costs of this “status quo approach” continue to mount, a major technical hurdle looms. To wit, the mainstream adoption of 10-Gbit Ethernet, with even faster 40- and 100-Gbit networks not far behind, is causing packet analysis products to either wilt under pressure or require expensive upgrades and retrofits.

The report goes on to examine how convergence can and should be applied to packet-based monitoring. Further, the analysis considers how a network visibility tools provider has addressed the challenge while bringing a scalable and compelling alternative approach to bear.

Examining current network-visibility capabilities as they relate to convergence, EMA notes, “Infrastructure engineering, security and operations professionals are doing their best to keep pace, by adapting management tools and processes in order to maintain vigilance over integrity and quality of service and application delivery ... Some of these tools are used on a constant basis while others are invoked only occasionally ... Not only are most tools themselves not ready to support the full 10G or 40G loads, but the criticality of their insights is most important when traffic volumes are at their highest.” -Matt Vincent

Ethernet Alliance gearing up for data center consolidation

The Ethernet Alliance recently announced details of its next interoperability test event, the TeraFabric Plugfest, which is scheduled to take place October 22 through 26 at the University of New Hampshire Interoperability Lab (UNH-IOL).

“Everyone understands there’s an inherent need to consolidate data center operations for the many benefits it offers, such as lowered capex and opex, unified visibility and management, and reduced maintenance complexity,” commented Henry He, marketing chair of the Ethernet in the Data Center subcommittee of the Ethernet Alliance. “But what’s less understood is how to cost-effectively do so without impacting performance.

“Ethernet’s inherent flexibility and ease-of-use make it the ideal solution for executing data center convergence strategies without affecting operational quality,” he added.

At the event, several Ethernet Alliance subcommittees will collaborate to ensure the TeraFabric Plugfest offers the most comprehensive and cohesive interoperability test experience possible, the Alliance said. Spanning five days’ time, the cross-discipline event will include a broad set of Ethernet technologies for the converged data center, such as Data Center Bridging (DCB), 10GBase-T, 40-Gbit Ethernet, high-speed cabling and Energy Efficient Ethernet.

“The majority of open standards-based testing has been highly focused on individual testing of each Ethernet technology domain,” explained John D’Ambrosia, chairman of the Ethernet Alliance. “In real-world data centers, all of these components must work together as part of a single, seamless environment. The Ethernet Alliance TeraFabric Plugfest presents a unique opportunity for the Ethernet ecosystem, as it unites a broad range of current and next-generation Ethernet components in a controlled test setting to illustrate the benefits that a converged network can bring to a data center.”

The 32,000-square-foot facility at the UNH-IOL houses a multimillion-dollar array of test equipment and devices. -Matt Vincent