New switch architectures' impact on 40/100G data center migration

From the February 2014 issue of Cabling Installation & Maintenance magazine

An addendum to the ANSI/TIA-942-A standard includes emerging architectures aimed at providing lower latency and higher bandwidth.

By Gary Bernstein, RCDD, DCDC; Leviton

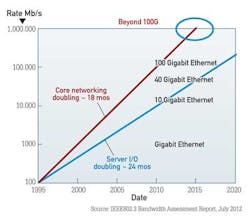

The July 2012 IEEE Bandwidth Assessment Report estimated that core networking bandwidth doubles every 18 months, while server bandwidth doubles every 24 months. Consistent with this trend, current forecasts from IDC, Dell'Oro Group and Crehan Research predict that 10G servers will account for more than 50 percent of server ports this year (2014), and we are already seeing 40G servers in use.
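The two doubling periods compound at different rates. A minimal Python sketch, assuming the 18- and 24-month doubling rates hold constant (a simplification for illustration, not a forecast), shows how quickly core bandwidth pulls ahead of server bandwidth; `growth_factor` is a hypothetical helper name.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Bandwidth multiple after `months`, assuming a constant doubling period."""
    return 2 ** (months / doubling_period_months)

CORE_DOUBLING = 18    # months, core networking (IEEE report cited above)
SERVER_DOUBLING = 24  # months, server bandwidth

for years in (3, 6):
    months = 12 * years
    print(f"{years} yr: core x{growth_factor(months, CORE_DOUBLING):.1f}, "
          f"server x{growth_factor(months, SERVER_DOUBLING):.1f}")
```

Under that assumption, six years is four core doublings (16x) but only three server doublings (8x), which is one way to see why uplink speeds must climb faster than server ports.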

Key data center trends driving these server upgrades include virtualization, higher density, cloud computing, lower-latency requirements and mobile-device traffic. A recent market study showed that 40 percent of all servers are now virtualized and that 90 percent of all enterprises conduct some form of virtualization. Mobile-device apps are also driving east-west traffic (server-to-server or storage-to-storage) rather than the traditional north-south traffic (client-to-server).

In addition, the continual demand for faster speeds and new applications has created the need for greater bandwidth and flatter topologies, and that demand will keep growing: a recent report from Cisco Systems anticipates more than 15 billion network connections by 2015. As network demands and requirements have changed, equipment architectures have changed with them. Leading switch manufacturers have developed new switch fabrics that require 40G and 100G ports, and these new switches are being built with greater flexibility and higher density.

With the emergence of virtualization, cloud computing and lower-latency requirements, the Telecommunications Industry Association (TIA) published an addendum, ANSI/TIA-942-A-1, in March 2013, specifying recommendations for telecommunications cabling to support new switch fabrics. The traditional three-tier architecture, which has supported data centers over the past five years, is no longer the best solution for meeting network growth. It comes with a number of disadvantages, including higher latency and higher energy requirements, so new solutions are needed to optimize performance.

Traditional Three-Tier Architecture

In traditional three-tier architecture, 12- or 24-fiber MPO cabling (such as MTP-brand cabling) is often implemented between the core and aggregation switches, converting to LC cabling at the access switch before SFP+ or RJ45 patching is connected to the server. The connectivity shown here is typical for a 10-GbE application.
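The MPO-to-LC conversion at the access layer is straightforward fiber arithmetic. The Python sketch below assumes each 10G link uses one duplex LC pair (one transmit and one receive fiber, as 10GBase-SR does) and that a cassette breaks an MPO trunk out into duplex LC ports; the 48-link count and the helper names are hypothetical.

```python
FIBERS_PER_DUPLEX_LC = 2  # one transmit and one receive fiber per 10G link

def duplex_lc_ports(trunk_fibers: int) -> int:
    """Duplex LC ports produced when an MPO trunk is broken out in a cassette."""
    if trunk_fibers not in (12, 24):
        raise ValueError("expected a 12- or 24-fiber MPO trunk")
    return trunk_fibers // FIBERS_PER_DUPLEX_LC

def trunks_needed(links: int, trunk_fibers: int) -> int:
    """MPO trunks required to carry `links` duplex 10G connections."""
    per_trunk = duplex_lc_ports(trunk_fibers)
    return -(-links // per_trunk)  # ceiling division without floats

# Hypothetical example: 48 duplex 10G links landing at one access switch.
for fibers in (12, 24):
    print(f"{fibers}-fiber trunks: {duplex_lc_ports(fibers)} LC ports each, "
          f"{trunks_needed(48, fibers)} trunks for 48 links")
```

A 12-fiber trunk yields six duplex ports and a 24-fiber trunk twelve, so the 24-fiber option halves the trunk count for the same link count.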

Full-Mesh Architecture

Full-mesh architecture dictates that every switch be connected to every other switch. Because switches are not typically placed in an equipment distribution area (EDA) and the fabric is not used for a top-of-rack (ToR) topology, full-mesh architecture is often used in small data centers and in metropolitan networks.
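Connecting every switch to every other switch means the link count grows with the square of the switch count. A short Python sketch (the `full_mesh` helper is a hypothetical name) counts the inter-switch links and the fabric ports each switch must dedicate to the mesh.

```python
def full_mesh(switches: int) -> tuple[int, int]:
    """Return (inter-switch links, fabric ports per switch) for a full mesh."""
    if switches < 2:
        raise ValueError("a mesh needs at least two switches")
    links = switches * (switches - 1) // 2  # one link per unordered switch pair
    ports_per_switch = switches - 1         # one link to each other switch
    return links, ports_per_switch

for n in (4, 8, 16, 32):
    links, ports = full_mesh(n)
    print(f"{n:>2} switches: {links:>3} links, {ports:>2} fabric ports each")
```

Doubling the switch count from 16 to 32 raises the link count from 120 to 496, which helps explain why a full mesh stays practical only at small scale.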

Full-mesh, interconnected-mesh, centralized and virtual-switch architectures are additional examples of the emerging architectures included in the published ANSI/TIA-942-A-1 standard. Like fat-tree architecture, these new switch fabric architectures provide lower latency and higher bandwidth than traditional architectures, and include non-blocking ports between any two points in a data center.

Fat-Tree Architecture

Fat-tree architecture, also called leaf-spine architecture, is one of the emerging switch architectures quickly replacing traditional solutions. It features multiple connections between interconnection switches (spine switches) and access switches (leaf switches) to support high-performance computing clusters. In addition to flattening and scaling out Layer 2 networks at the edge, fat-tree architecture creates a non-blocking, low-latency fabric. This type of switch architecture is typically implemented in large data centers.
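Whether a leaf-spine fabric is non-blocking comes down to comparing each leaf's server-facing bandwidth with its spine-facing bandwidth. The Python sketch below assumes every leaf has exactly one uplink to every spine, so spine port count caps the number of leaves; the port counts and function names are hypothetical.

```python
def fabric_links(leaves: int, spines: int, spine_ports: int) -> int:
    """Leaf-to-spine links when every leaf has one uplink to every spine."""
    if leaves > spine_ports:
        raise ValueError("each spine needs one port per leaf")
    return leaves * spines

def oversubscription(down_ports: int, down_gbps: int,
                     up_ports: int, up_gbps: int) -> float:
    """Server-facing vs. spine-facing bandwidth on one leaf; <= 1.0 is non-blocking."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)

# Hypothetical leaves with 4 x 40G uplinks (one per spine).
print(fabric_links(leaves=32, spines=4, spine_ports=32))  # 128 links
print(oversubscription(48, 10, 4, 40))                     # 3.0, i.e. 3:1
print(oversubscription(16, 10, 4, 40))                     # 1.0, non-blocking
```

Adding spines raises uplink bandwidth per leaf, while larger spine switches raise the number of leaves the fabric can hold.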

In the fat-tree example shown, the interconnection switch is connected directly to the access switch through 12- or 24-fiber MTP trunks, using either a conversion module (24-fiber) or an MTP adapter panel (12-fiber). Compared with traditional three-tier architecture, fat-tree architecture uses fewer aggregation switches and provides redundant paths to support 40GBase-SR4 between the access and interconnection switches, thus reducing latency and energy requirements.
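One rough proxy for the latency difference is the number of switches an east-west packet crosses. The Python sketch below builds two small hypothetical topologies (a two-pod three-tier network with two core switches, and a four-leaf, two-spine fabric) and runs a breadth-first search between servers in different pods or on different leaves. Real latency also depends on switch design, so this counts hops only.

```python
from collections import deque
from itertools import product

def add_link(graph: dict[str, set[str]], a: str, b: str) -> None:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)

def switches_on_path(graph: dict[str, set[str]], src: str, dst: str) -> int:
    """Breadth-first search; returns the switches crossed between two servers."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist - 1  # links on the path minus one = switches in between
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError("no path between servers")

def three_tier(pods: int = 2) -> dict[str, set[str]]:
    g: dict[str, set[str]] = {}
    cores = ["core0", "core1"]
    for p in range(pods):
        aggs = [f"agg{p}.{i}" for i in range(2)]
        accs = [f"acc{p}.{i}" for i in range(2)]
        for agg, core in product(aggs, cores):
            add_link(g, agg, core)      # aggregation uplinks to every core
        for acc, agg in product(accs, aggs):
            add_link(g, acc, agg)       # access uplinks to its pod's aggregation
        add_link(g, f"server{p}", accs[0])
    return g

def leaf_spine(leaves: int = 4, spines: int = 2) -> dict[str, set[str]]:
    g: dict[str, set[str]] = {}
    for leaf, spine in product(range(leaves), range(spines)):
        add_link(g, f"leaf{leaf}", f"spine{spine}")  # every leaf to every spine
    add_link(g, "serverA", "leaf0")
    add_link(g, "serverB", f"leaf{leaves - 1}")
    return g

print(switches_on_path(three_tier(), "server0", "server1"))  # 5
print(switches_on_path(leaf_spine(), "serverA", "serverB"))  # 3
```

Because every leaf connects to every spine, any two servers stay three switches apart however many leaves are added, whereas cross-pod traffic in the three-tier model climbs to the core and back.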

Interconnected-Mesh Architecture

Interconnected-mesh architecture, similar to full-mesh architecture, is highly scalable, making it less costly and easier to build out as a company grows. It typically maintains one to three interconnection switches, located in horizontal distribution areas (HDAs) or EDAs, and is non-blocking within each full-mesh pod.
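Splitting switches into full-mesh pods keeps link counts manageable. The Python sketch below assumes, purely for illustration, that every pod switch has one uplink to each of the one to three interconnection switches; actual designs may wire pods differently, and the pod sizes and function names are hypothetical.

```python
def full_mesh_links(n: int) -> int:
    """Links in a full mesh of n switches."""
    return n * (n - 1) // 2

def interconnected_mesh_links(pods: int, switches_per_pod: int,
                              interconnects: int) -> int:
    """Intra-pod full-mesh links plus one uplink from every pod switch to each
    interconnection switch (an assumed wiring pattern)."""
    if not 1 <= interconnects <= 3:
        raise ValueError("the text describes one to three interconnection switches")
    intra_pod = pods * full_mesh_links(switches_per_pod)
    uplinks = pods * switches_per_pod * interconnects
    return intra_pod + uplinks

# Hypothetical: 16 switches as four 4-switch pods with two interconnection switches.
print(interconnected_mesh_links(4, 4, 2))  # 56 links
print(full_mesh_links(16))                 # 120 links as one flat mesh
```

Under these assumptions, adding a pod adds a fixed block of links rather than a link to every existing switch, which is the scaling advantage the text describes.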

Virtual-Switch Architecture

Virtual-switch architecture, though similar to centralized architecture, uses interconnected switches to form a single virtual switch. Each server is connected to multiple switches for redundancy, which can lead to higher latency. Unfortunately, virtual-switch architecture does not scale well unless fat-tree or full-mesh architecture is implemented between the virtual switches.

Centralized Architecture

In a centralized architecture, each server is connected to all fabric switches. Often managed by a single server, centralized architecture is easy to maintain with a small staff. However, port limitations can prevent this type of architecture from scaling well. As a result, like full-mesh architecture, centralized architecture is typically used in small data centers.
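The port limitation follows directly from connecting every server to every fabric switch: each server consumes a port on each switch. A minimal Python sketch, with hypothetical port counts and helper names, shows that the smallest switch caps server count no matter how many switches are added.

```python
def centralized_capacity(fabric_ports: list[int], reserved: int = 0) -> int:
    """Maximum servers when every server connects to every fabric switch.

    Each server uses one port on *every* switch, so the switch with the fewest
    free ports sets the limit; extra switches add redundancy and per-server
    bandwidth, not server capacity.
    """
    if not fabric_ports:
        raise ValueError("need at least one fabric switch")
    return min(ports - reserved for ports in fabric_ports)

def uplinks_per_server(fabric_ports: list[int]) -> int:
    """Each server needs one connection to each fabric switch."""
    return len(fabric_ports)

print(centralized_capacity([48, 48]))           # 48 servers
print(centralized_capacity([48, 48, 48, 48]))   # still 48 servers
print(uplinks_per_server([48, 48, 48, 48]))     # 4 connections per server
```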

With the emergence of new switch architectures comes a greater demand for design flexibility along with the need for optimal scalability. Preterminated 12- or 24-fiber MPO/MTP cabling is ideal for supporting higher-bandwidth requirements in data centers that use these architectures. It allows the cabling design to maximize fiber utilization throughout the infrastructure, and it provides an easy migration path to current or future IEEE 40G and 100G bandwidth, as specified in ANSI/TIA-942-A-1.
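Fiber utilization matters because 40GBase-SR4 lights only 8 of the 12 positions in an MPO connector (4 transmit and 4 receive fibers). The Python sketch below assumes a 12-fiber trunk on an adapter panel feeds one SR4 port directly and a 24-fiber trunk is split by a conversion module into three 8-fiber SR4 ports; `sr4_links` is a hypothetical name.

```python
SR4_FIBERS = 8  # 40GBase-SR4 uses 4 transmit + 4 receive fibers

def sr4_links(trunk_fibers: int) -> tuple[int, float]:
    """Return (40G SR4 links, fraction of trunk fibers in use) for one trunk.

    Assumes a 12-fiber trunk feeds one SR4 port (4 fibers dark) and a
    24-fiber trunk is converted into three 8-fiber SR4 ports.
    """
    if trunk_fibers not in (12, 24):
        raise ValueError("expected a 12- or 24-fiber MPO/MTP trunk")
    links = trunk_fibers // SR4_FIBERS
    return links, links * SR4_FIBERS / trunk_fibers

for fibers in (12, 24):
    links, used = sr4_links(fibers)
    print(f"{fibers}-fiber trunk: {links} x 40G, {used:.0%} of fibers lit")
```

Under these assumptions, the 12-fiber approach leaves a third of the fibers dark, while the 24-fiber conversion uses all of them.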

New, lower-cost transceiver technology has also recently been developed to work in tandem with preterminated 12- or 24-fiber MPO/MTP cabling, supporting higher levels of performance and data center links longer than 150 meters. For example, new extended-reach QSFP+ transceivers can support 40GBase-SR4 beyond 150 meters. These multimode transceivers transmit over longer distances using the same cable: up to 300 meters over OM3 and 400 meters over OM4. Using preterminated MTP cabling and connectivity, Leviton performed active testing at customer data centers with Cisco and Arista switches fitted with these transceivers, and confirmed that 10G and 40G channels both worked at the stated distances with zero errors and no lost data packets. Using extended-reach SR4 multimode optics instead of the more costly 40GBase-LR4 singlemode option gives customers a lower-cost, lower-power choice for distances longer than 150 meters.
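Choosing optics then becomes a reach check against fiber type. The Python sketch below uses the standard 40GBase-SR4 reach from IEEE 802.3ba (100 meters over OM3, 150 meters over OM4) and the extended-reach figures quoted above (300 and 400 meters); vendor specifications for extended-reach parts vary, so treat the table and the `choose_40g_optic` helper as hypothetical.

```python
# Maximum reach in meters by multimode fiber type.
STANDARD_SR4 = {"OM3": 100, "OM4": 150}  # IEEE 802.3ba 40GBase-SR4
EXTENDED_SR4 = {"OM3": 300, "OM4": 400}  # extended-reach figures cited above

def choose_40g_optic(distance_m: float, fiber: str) -> str:
    """Pick the least costly 40G option that reaches `distance_m`."""
    fiber = fiber.upper()
    if fiber not in STANDARD_SR4:
        raise ValueError("expected OM3 or OM4 multimode fiber")
    if distance_m <= STANDARD_SR4[fiber]:
        return "40GBase-SR4"
    if distance_m <= EXTENDED_SR4[fiber]:
        return "extended-reach SR4"
    # Beyond multimode reach: LR4 also requires singlemode cabling.
    return "40GBase-LR4 (singlemode)"

print(choose_40g_optic(120, "OM4"))  # 40GBase-SR4
print(choose_40g_optic(250, "OM3"))  # extended-reach SR4
```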

To support new architectures, the IEEE is working on new physical-layer requirements. The IEEE P802.3bm task force is developing standards for next-generation 40/100-GbE technologies that lower cost, reduce power consumption and increase density; the P802.3bm standard is estimated to be completed in the first quarter of 2015. In addition, the IEEE 802.3 400G Study Group, formed in March 2013, has established initial objectives for 400G over OM3 or OM4 fiber using 25G per channel, similar to the proposed P802.3bm standards. The new 400G standard is estimated to be completed by 2017. These standards developments are needed to support higher network uplink speeds, so designs should be flexible enough to accommodate future scaling.
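Lane rate determines how many fibers a parallel multimode link needs. The Python sketch below assumes parallel optics with one fiber per lane in each direction, as 40GBase-SR4 uses; the 400G row simply applies the 25G-per-channel objective above and is an illustration, not a finished standard.

```python
def parallel_fibers(rate_gbps: int, lane_gbps: int) -> tuple[int, int]:
    """(lanes, fibers) for a parallel multimode link, one fiber per lane
    in each direction."""
    if rate_gbps % lane_gbps:
        raise ValueError("rate must be a whole number of lanes")
    lanes = rate_gbps // lane_gbps
    return lanes, 2 * lanes

for rate, lane in [(40, 10), (100, 10), (100, 25), (400, 25)]:
    lanes, fibers = parallel_fibers(rate, lane)
    print(f"{rate}G over {lane}G lanes: {lanes} lanes, {fibers} fibers")
```

Moving 100G from 10G to 25G lanes cuts the fiber count from 20 to 8, while 400G at 25G per lane would need 32 fibers, more than one 24-fiber trunk carries; that is one concrete reason to keep cabling designs flexible.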

It is critical to understand the impact of these new architectures and standards on your data center and to prepare a migration strategy for moving to 40G, 100G and beyond. When upgrading a network, it is also important to seek assistance from experts who understand the evolution of the data center environment and fabric design architecture.

Gary Bernstein, RCDD, DCDC is senior director of product management at Leviton (www.leviton.com/networksolutions).
