Creating and deploying a unified fabric in the data center requires consideration of today’s and tomorrow’s cabling systems.

By Kevin Komiega

Storage area networks (SANs) came to the forefront in the late 1990s as a preferred way to connect servers to external, shared storage devices. This evolution from direct-attached, local server storage to networked storage created two divergent data center networks: SANs for storage and local area networks (LANs) for application traffic.

There was a clear need for two separate networks because Ethernet protocols were, at that time, not up to the task of transporting storage traffic due to performance and latency risks and a penchant for dropping data packets.

A little more than a decade later, times and technologies are changing. Ethernet speeds are quickly surpassing those of Fibre Channel, and lossless performance is paving the way for 10-, 40-, and 100-Gbit Ethernet to potentially displace Fibre Channel as the foundation for SAN networking in the data center. That raises the question: What will the LAN and SAN infrastructure look like in the future?

Operating separate network infrastructures for LANs and SANs carries considerable cost and complexity, especially on the storage side of the data center. Fibre Channel SANs require host bus adapters (HBAs) for server connectivity, not to mention storage administrators with a completely different skill set than their network administrator counterparts.

In terms of cabling, Fibre Channel SANs employ optical fiber, coaxial copper, or twisted-pair copper cabling with speeds of 1, 2, 4, and 8 Gbits/sec.

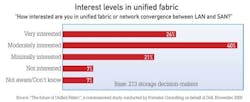

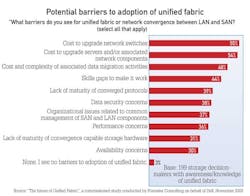

In a study conducted by Forrester Consulting for Dell last fall, 66% of storage networking decision-makers said they are either very interested or moderately interested in a unified fabric/network convergence between LAN and SAN.

The advent of 10-, 40-, and 100-Gbit Ethernet could eventually eliminate the need for separate LANs and SANs, network teams and cabling infrastructures by creating a single network fabric for LAN and SAN traffic.

Interest in a unified fabric

According to a recently released research study conducted by information technology (IT) research firm Forrester Consulting and commissioned by Dell Inc., customer interest in using 10-Gbit Ethernet as the physical network or common network protocol for storage and application network traffic – known in the industry as the unified fabric – is on the rise.

The study, which was based on a survey of 213 storage professionals in the United States, United Kingdom, China, and the Netherlands, revealed that interest in SAN/LAN convergence is high. Sixty-six percent of respondents overall said that they are very interested or moderately interested in the concept of unified fabric or SAN/LAN convergence.

In terms of storage, it remains to be seen which protocol will win out as the de facto transport mechanism for storage traffic. The Internet Small Computer Systems Interface (iSCSI) has an entrenched installed base, while Fibre Channel over Ethernet (FCoE) is coming on strong as customers seek ways to connect existing Fibre Channel storage devices to the network. Regardless, the consensus is that high-speed Ethernet will serve as the underlying transport mechanism.

Cabling for the next generation

Most experts believe the separation of LANs and SANs will remain for the next several years. David Kozischek, data center market manager for Corning Cable Systems (www.corning.com/cablesystems), predicts that within the next two years, 40-Gbit/sec uplinks to edge switches will be used to support 10-Gbit/sec downlinks into servers, while 40-Gbit/sec server connections will make their way into the network over the course of the next five years.

However, he maintains that Fibre Channel will continue to dominate in the storage realm for the majority of this decade.

“Based on history, we know that any technology that substitutes for another will take time to replace the existing one. I think both technologies will coexist in the data center for some time,” states Kozischek. “But I would start thinking now about the cabling that will be supporting [40- and 100-Gbit] networks in the future.”

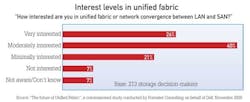

A reduction in cabling complexity was characterized as a facilities benefit in the Forrester study. That complexity reduction, alongside other facilities benefits such as a reduced footprint, appealed to roughly half of the storage-networking professionals who are familiar with the unified-fabric concept.

Several data center trends are driving the need for 40- and 100-Gbit Ethernet, including server virtualization, cloud computing, and network convergence. While the aforementioned technologies can reduce the number of servers and storage devices that reside in the data center, they all increase the load on the network.

Kozischek says the growing need for more storage and faster response times will drive the need to increase network bandwidth, and that avoiding bandwidth bottlenecks to storage access is of paramount concern. Couple those storage demands with the ever-increasing speeds of server processors and the proliferation of virtual servers, and I/O demands are poised to skyrocket.

Users should weigh several considerations when it comes to future cabling designs for LANs and SANs. According to Kozischek, optical loss and how well cables perform when bent and routed will be the keys to a design that can migrate from 10- to 40- and 100-Gbit Ethernet.

Other considerations for network designers include the following.

- Media options: OM3 or OM4 (multimode), or OS2 (singlemode).

- Distance: Are the links in the data center 100 to 125 m, or longer?

- Network architectures: How will the cabling and hardware architectures migrate to 40- and 100-Gbit speeds? Can I run different types of logical network architectures (point-to-point, mesh, and ring) over the physical architecture I deploy today?

- Support: Data migration per established roadmaps, reliable transmission, higher density.

Kozischek believes OM3 and OM4 fiber will be the dominant choices in the data center due to lower electronics costs versus singlemode options. He says the use of parallel optic transmission will affect the types of cables needed in the data center, making polarity a “big issue.”
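Why parallel optics turn polarity into an issue can be illustrated with a small model. The Python sketch below assumes a 12-fiber MPO trunk described by the TIA-568 cable types (Type A straight-through, Type B reversed, Type C pair-flipped), and it assumes the 40GBASE-SR4 lane layout of transmit fibers in positions 1 to 4 and receive fibers in positions 9 to 12. The function names are hypothetical, and the model ignores patch cords and adapter keying, which in a real channel can supply a flip that the trunk itself lacks.

```python
# Hypothetical model of MPO-12 trunk polarity (TIA-568 cable Types A, B, C).
# Assumes transceivers plug straight into the trunk ends; patch cords and
# adapter keying, which can also reverse fibers, are ignored.

TX_POSITIONS = {1, 2, 3, 4}      # assumed 40GBASE-SR4 transmit lanes
RX_POSITIONS = {9, 10, 11, 12}   # assumed 40GBASE-SR4 receive lanes


def trunk_map(trunk_type: str, position: int) -> int:
    """Return the far-end fiber position reached from a near-end position."""
    if not 1 <= position <= 12:
        raise ValueError("MPO-12 positions run from 1 to 12")
    if trunk_type == "A":   # straight-through: 1->1, 2->2, ... 12->12
        return position
    if trunk_type == "B":   # reversed: 1->12, 2->11, ... 12->1
        return 13 - position
    if trunk_type == "C":   # pair-wise flip: 1->2, 2->1, 3->4, 4->3, ...
        return position + 1 if position % 2 == 1 else position - 1
    raise ValueError(f"unknown trunk type: {trunk_type!r}")


def link_is_correct(trunk_type: str) -> bool:
    """True if every transmit lane arrives at a receive position.

    Each mapping is its own inverse, so checking one direction also
    covers the return path from the far-end transmitters.
    """
    return all(trunk_map(trunk_type, p) in RX_POSITIONS for p in TX_POSITIONS)


for trunk in ("A", "B", "C"):
    status = "OK" if link_is_correct(trunk) else "polarity mismatch"
    print(f"Type {trunk} trunk: {status}")
```

Under these assumptions, only the reversed Type B trunk delivers every transmit lane to a receive position; a straight-through Type A trunk connects transmitters to transmitters unless a polarity flip is added elsewhere in the channel.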

Distance and link-loss budgets will also become more important as data centers move to higher speeds.

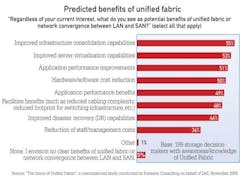

Cost concerns dominate the list of potential barriers to the adoption of a unified fabric.

“Link budgets and distance will be a function of how many cross-connects are in each link,” Kozischek says. “It will become more important to adhere to data center cabling standards like TIA-942 to ensure that architectures can migrate seamlessly from 10- to 40- and 100-Gbit Ethernet.”
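Kozischek's point about cross-connects can be made concrete with a rough loss calculation. The sketch below treats channel insertion loss as fiber attenuation plus a fixed loss per mated connector pair. The 3.5 dB/km attenuation, 0.5 dB per mated pair, and 1.9 dB channel budget are assumed, illustrative values (1.9 dB is a figure commonly cited for 40GBASE-SR4 over 100 m of OM3); real designs should take these numbers from the applicable standard and the connector vendor's specifications. The function names are hypothetical.

```python
# Rough multimode channel insertion-loss estimate.
# All three constants are illustrative assumptions, not quoted requirements.
FIBER_DB_PER_KM = 3.5   # assumed cabled-fiber attenuation at 850 nm
CONNECTOR_DB = 0.5      # assumed loss per mated connector pair
BUDGET_DB = 1.9         # assumed channel insertion-loss budget


def channel_loss_db(length_m: float, mated_pairs: int,
                    fiber_db_per_km: float = FIBER_DB_PER_KM,
                    connector_db: float = CONNECTOR_DB) -> float:
    """Fiber attenuation plus a fixed loss for each mated connector pair."""
    return length_m / 1000.0 * fiber_db_per_km + mated_pairs * connector_db


def max_mated_pairs(length_m: float, budget_db: float = BUDGET_DB,
                    fiber_db_per_km: float = FIBER_DB_PER_KM,
                    connector_db: float = CONNECTOR_DB) -> int:
    """Largest number of mated pairs that keeps the channel within budget."""
    remaining = budget_db - length_m / 1000.0 * fiber_db_per_km
    if remaining < 0:
        raise ValueError("fiber attenuation alone exceeds the budget")
    return int(remaining // connector_db)


for pairs in (2, 4):
    loss = channel_loss_db(100, pairs)
    verdict = "within" if loss <= BUDGET_DB else "over"
    print(f"100 m, {pairs} mated pairs: {loss:.2f} dB ({verdict} budget)")
print("Max mated pairs at 100 m:", max_mated_pairs(100))
```

With these assumed values, a 100 m link with two mated pairs stays within the budget, while the same link with four mated pairs, as additional cross-connects might introduce, exceeds it.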

According to Kozischek, greater convergence of electronics can lead to less connectivity in the data center, but it also leads to lower oversubscription ratios on the optical ports, which can increase fiber count.
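The link between oversubscription and fiber count comes down to simple arithmetic: the oversubscription ratio is the server-facing (downlink) bandwidth divided by the uplink bandwidth, so lowering the ratio means adding uplinks. The sketch below assumes a hypothetical edge switch serving 48 servers at 10 Gbits/sec with 40-Gbit/sec uplinks, each assumed to use eight fibers (four transmit, four receive) as in a parallel-optic 40GBASE-SR4 link. The function names and port counts are illustrative only.

```python
import math

FIBERS_PER_40G_LINK = 8  # assumed: 4 transmit + 4 receive fibers (parallel optics)


def uplinks_needed(servers: int, server_gbps: float,
                   uplink_gbps: float, oversubscription: float) -> int:
    """Uplinks required so downlink/uplink bandwidth <= the target ratio."""
    downlink_gbps = servers * server_gbps
    return math.ceil(downlink_gbps / (oversubscription * uplink_gbps))


def uplink_fibers(uplinks: int, fibers_per_link: int = FIBERS_PER_40G_LINK) -> int:
    """Total uplink fiber count for a given number of parallel-optic links."""
    return uplinks * fibers_per_link


# Hypothetical edge switch: 48 servers at 10 Gbits/sec, 40-Gbit/sec uplinks.
for ratio in (4, 2, 1):
    n = uplinks_needed(48, 10, 40, ratio)
    print(f"{ratio}:1 oversubscription -> {n} uplinks, {uplink_fibers(n)} fibers")
```

In this example, moving from 4:1 to 1:1 oversubscription quadruples the number of uplinks, and the uplink fiber count grows with it.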

Today, 10-Gbit Ethernet technologies are shipping, and ratification of the 40- and 100-Gbit standards is tentatively slated for June 2010.

Milestones to reach

David Percival, a senior systems engineer with ADC, says all of the passive infrastructure vendors have had product available for quite some time. There is, however, one potential hurdle to the adoption of 40- and 100-Gbit Ethernet.

“The cabling infrastructure has been ready in advance of the electronics for a couple of years, but that’s typically how things work. It happened that way for Cat 6 and 6A. Looking toward the future, the only snag that could potentially occur in terms of trying to prepare for 40- and 100-Gbit would have to do with selecting your connectors for fiber, particularly for multimode fiber,” states Percival.

In their current form, MPO multi-fiber connectors, which terminate multiple fibers in a single connector to reduce overall system footprint, are an area of concern, according to Percival.

“The MPO connectors are the only [component] where there could be some technological improvements. They are currently at a place where they could potentially support these data rates, but you can always improve a native connector,” he says. “The connector is the weakest link.”

Kevin Komiega is a contributing editor for Cabling Installation & Maintenance and senior editor of InfoStor magazine, which covers storage networking.