Familiar issues, such as density, cooling, and power consumption, have forced change in the design of data center cabling systems and products.

If one could use x-ray vision to peer through the roof of a data center, it might not be immediately evident whether it was built in the 1980s or last year, because the bird’s-eye view in either case would include rows of racks and enclosures. Up close, however, a 1980s-era data center would look quite different from one built today. The level of performance now required, and the technology developed to meet it, have changed how data centers look on the inside, from the high-density connections contained within rack space to new products designed to address cooling and energy efficiency.

“Power and cooling resource allocation has become problematic because of the demands and density of next-generation computing technology and the amount of connections those systems require inside the data center,” explains Marc Naese, solutions development manager with Panduit (www.panduit.com). The cycle is familiar to every data center manager, as well as to every cabling installer and technician servicing data centers. All computers produce heat, and network servers produce significant amounts of it. Even in a relatively low-density data center, multiple servers clustered together will produce a hotspot.

Heat, cool, power cycle

In a high-density environment, servers may contain very densely packed ports, and behind each port is the network circuitry that generates heat. These high-density servers, called blades because they pack many circuits into a thin form factor, allow far more network ports per rack unit than less-dense servers. Yet they produce significant amounts of heat that must be managed.

Is port density worth the resulting heat generation? In many cases, yes; real estate can be hard to come by in data centers. The matter gets more complicated, however, when we consider the systems needed to cool the data center’s hot areas. Beyond strategically laying out a cooling infrastructure, data center managers must be aware that these cooling systems themselves consume significant amounts of electricity.

“The concerns of data center managers and designers are inter-related,” says Vinnie Jain, advanced market manager with Ortronics/Legrand (www.ortronics.com). “Higher-density installations are needed to best utilize space and handle increasing requirements for bandwidth. As density increases, heat generation goes up, and cooling, especially maintaining airflow and hot-aisle/cold-aisle separation, becomes more of a challenge.”

Couple that with the electricity the networking equipment consumes, and many data center managers find themselves perpetually balancing the amount of real estate against the amount of electricity available to them.

“Some data centers have real estate but not enough power; some have enough power but not enough real estate,” says Naese. “The growing demand for both is driven by changing technology and the smaller form factor servers. Customers are calculating the amount of available real estate and power to determine the most efficient deployment of compute resources to optimize their real estate and power investment to get the best return on investment. While this seems good in theory, it does not always equate to reality, especially as technology continues to evolve. For example, when you buy a new server, that server may take up less rack space but require more power than you anticipated.”
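Naese’s point about space versus power can be made concrete with simple arithmetic. The Python sketch below is a minimal illustration, not a planning tool: the site capacity and per-server figures are hypothetical values chosen for the example, not numbers from Panduit or any vendor. It divides the available rack units and power budget by one server’s footprint and reports which resource runs out first.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Site:
    """Hypothetical resources available for a server deployment."""
    rack_units: int   # total usable rack space, in rack units (U)
    power_watts: int  # usable power budget for IT equipment, in watts


@dataclass
class Server:
    """Hypothetical footprint of one server model."""
    height_u: int     # rack units the server occupies
    draw_watts: int   # expected power draw per server, in watts


def max_servers(site: Site, server: Server) -> Tuple[int, str]:
    """Return how many servers fit and which resource is exhausted first."""
    by_space = site.rack_units // server.height_u     # limit imposed by rack space
    by_power = site.power_watts // server.draw_watts  # limit imposed by power
    if by_space < by_power:
        return by_space, "rack space"
    if by_power < by_space:
        return by_power, "power"
    return by_space, "both"


# Illustrative numbers only: the same site with two different server models.
site = Site(rack_units=400, power_watts=120_000)
print(max_servers(site, Server(height_u=2, draw_watts=400)))  # (200, 'rack space')
print(max_servers(site, Server(height_u=1, draw_watts=700)))  # (171, 'power')
```

With these made-up figures, the 2U server is limited by rack space, while the smaller but hungrier 1U server exhausts the power budget long before the racks are full, which is the situation Naese describes. A real assessment would also account for cooling capacity, redundancy, and derating, which this sketch ignores.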

Suppliers of products and technologies for data center networks and cabling systems have developed numerous systems to address these density and heat/cooling issues. This year Panduit introduced the Cool Boot Raised Floor Air Sealing Grommet, which directs more conditioned air toward network equipment and so reduces the risk of overheating.

Panduit is one of several manufacturers that offer angled patch panels to make cable management more practical in areas of dense connectivity. Angled patch panels are an outgrowth of high-density patch panels, which can hold 48 twisted-pair copper ports in a single rack unit (U) and sometimes as many as 96 fiber-optic connections in 1U.
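To put those densities in perspective, the Python sketch below computes how many rack units of patch panel are needed to terminate a given number of connections. It assumes every panel is populated at the upper-end densities cited above; the function name and the example connection counts are hypothetical.

```python
# Upper-end ports per rack unit (U) for high-density patch panels, as cited
# in the article; real panels are often less dense.
PORTS_PER_U = {
    "copper": 48,  # twisted-pair copper
    "fiber": 96,   # fiber-optic
}


def panel_units_needed(connections: int, medium: str) -> int:
    """Rack units of patch panel needed to terminate `connections` ports,
    assuming every panel is populated at the density in PORTS_PER_U."""
    per_u = PORTS_PER_U[medium]
    return (connections + per_u - 1) // per_u  # integer ceiling division


print(panel_units_needed(288, "copper"))  # 6
print(panel_units_needed(288, "fiber"))   # 3
print(panel_units_needed(300, "copper"))  # 7
```

At these densities, fiber terminates the same number of connections in half the panel space of copper, and either way a great many cables converge on a very small area of the rack.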

Management in focus

Such a high density of cabling connections in a single rack has brought increasing focus on data center cable management. Good cable-management practices are necessary not just so that a cabling system will look appealing, but to keep cables from impeding the path of airflow. A nest of cables that obstructs airflow will exacerbate the heat-generation/space-cooling/power-consumption quandary.

“Many times, a data center that is perceived to be energy-efficient can be totally derailed by improper cable management,” says John Schmidt, senior product manager/business development with ADC (www.adc.com). “Lower throughput of air will have multiple effects. It will raise the core temperature of active equipment, which lowers that equipment’s overall reliability.”

Schmidt adds that while schematic drawings of hot-aisle/cold-aisle setups depict a perfect world of networking-equipment alignment and passive cooling, they do not account for the reality that many data centers suffer from poor cable management. As part of a presentation that displays three examples of cable management, one called “the good,” another “the bad,” and a third “the ugly,” Schmidt states, “Bad cable management is characterized by unorganized cables. If you look under a raised floor in a badly managed installation, you will find a mess of copper and fiber-optic cables, which will reduce reliability and block the airflow of air-conditioning equipment under the raised floor.”

An alternative to the under-floor cable mess is to route cabling overhead. Ortronics’ Jain explains, “Overhead cable trays and overhead patch panels can be used. It amounts to a zero-rack unit patch panel because it can be removed from the rack altogether and the cables terminated overhead. That reduces congestion within the rack.” Both primary cable types, twisted-pair copper and multimode fiber, can be terminated overhead.

The UTP version of Augmented Category 6 (Category 6A) cable is now in its second generation. Category 6A was designed and standardized specifically to support 10GBase-T transmission, which will take hold first in data centers. The primary difference between first- and second-generation Category 6A UTP is the cable’s shrinking outside diameter. Most first-generation UTP products were larger than foiled/unshielded twisted-pair (F/UTP) Category 6A cables, and manufacturers have been working to reduce the cable’s outside diameter. One benefit of smaller-diameter cable is more efficient cable management, and therefore less air obstruction in the data center.

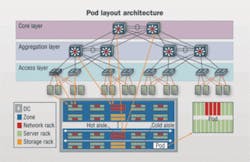

In an initiative to optimize the physical layout of data centers, Panduit and networking-equipment manufacturer Cisco Systems (www.cisco.com) have collaboratively developed a pod reference architecture. “A pod is a scalable and manageable group of network and that can be easily reproduced within your data center as it expands,” says Panduit’s Naese.

A data center design guide published by Panduit describes the concept of the pod and how to integrate the physical and logical network architectures into a seamless environment. The guide states: “One way to simplify the design and simultaneously incorporate a scalable layout is to divide the raised floor space into modular building blocks used in order to design scalability into the network architecture at both OSI Layers 1 and 2. The logical architecture is divided into three discrete layers, and the physical infrastructure is designed and divided into manageable subareas called pods.”

The design guide further describes two switch architectures: top-of-rack (ToR) and middle-of-row (MoR). A ToR configuration includes an access-layer switch in each server cabinet. In the MoR configuration, server cabinets contain patch fields rather than access switches, and the total number of switches used in each pod drives the physical-layer design decisions.

The design guide notes, “A top-of-rack design reduces cabling congestion and power footprint, and enables a cost-effective high-performance network infrastructure. Some tradeoffs include reduced manageability and network scalability for high-density deployments. A middle-of-row deployment leverages chassis-based technology for each row of servers to provide greater throughput, higher densities, and greater scalability throughout the data center.”
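The tradeoff described in the design guide can be illustrated with a rough count of access switches and of cables that must leave each server cabinet. The Python sketch below is a deliberately simplified model built on assumptions that do not come from the Panduit guide: the pod dimensions, server ports per cabinet, ToR uplink count, and one switch chassis per row in the MoR case are all hypothetical. It encodes only what the guide states: ToR places an access switch in every server cabinet, while MoR cabinets hold patch fields cabled to chassis-based switching in the row.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Pod:
    """Hypothetical pod dimensions; every value is an illustrative assumption."""
    rows: int
    cabinets_per_row: int          # server cabinets in each row
    server_ports_per_cabinet: int  # server ports that need switch access
    uplinks_per_tor_switch: int    # ToR uplinks toward the next network layer


def tor_summary(pod: Pod) -> Dict[str, int]:
    """Top-of-rack: one access switch per server cabinet, so server cabling
    stays inside the cabinet and only the switch uplinks leave it."""
    cabinets = pod.rows * pod.cabinets_per_row
    return {
        "access_switches": cabinets,
        "cables_leaving_cabinets": cabinets * pod.uplinks_per_tor_switch,
    }


def mor_summary(pod: Pod, chassis_per_row: int = 1) -> Dict[str, int]:
    """Middle-of-row: cabinets hold patch fields instead of switches, and
    every server port is cabled out to chassis-based switching in its row."""
    cabinets = pod.rows * pod.cabinets_per_row
    return {
        "access_switches": pod.rows * chassis_per_row,
        "cables_leaving_cabinets": cabinets * pod.server_ports_per_cabinet,
    }


pod = Pod(rows=2, cabinets_per_row=10, server_ports_per_cabinet=32,
          uplinks_per_tor_switch=2)
print(tor_summary(pod))  # {'access_switches': 20, 'cables_leaving_cabinets': 40}
print(mor_summary(pod))  # {'access_switches': 2, 'cables_leaving_cabinets': 640}
```

With these illustrative numbers, ToR keeps most cabling inside the cabinet but multiplies the number of switches to manage, while MoR concentrates switching in a few chassis at the cost of many more cables running between cabinets. Real designs add redundancy, extra uplinks, and patching layers that this model leaves out.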

Data center managers are employing various means to achieve both efficient network operation and efficient energy consumption. Available options range from the comprehensive, such as the pod approach, to the relatively simple, such as using smaller-diameter cable for improved cable management and pathway use.

This magazine has published numerous articles characterizing the data center as an ecosystem, in which each element affects and is affected by the elements around it. As such, using one of the technologies described in this article does not preclude using others. The data center is a complex whole, and the means by which one manages a data center can be complex as well.

Patrick McLaughlin is chief editor of Cabling Installation & Maintenance.