1000Base-X has introduced a host of testing parameters that complicate testing procedures.

Fanny Mlinarsky

Scope Communications Inc.

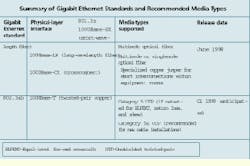

Gigabit Ethernet standards development work has been progressing steadily for the past two years. The main standard--IEEE 802.3z, for fiber--was ratified last June by the Institute of Electrical and Electronics Engineers (IEEE--New York City).

The standard for transmitting Gigabit Ethernet over Category 5 copper wiring is still being developed by IEEE Working Group 802.3ab, with an expected release date of mid-1999. When completed, 802.3ab will pave the way for the eventual deployment of Gigabit Ethernet to the desktop over the installed base of Category 5 or Enhanced Category 5 unshielded twisted-pair cabling. Initially, however, most end-users will deploy Gigabit Ethernet in their network backbones, where optical fiber is typically the medium of choice.

The 802.3z standard defines two types of services: 1000Base-SX, operating at 850 nanometers, and 1000Base-LX, operating at 1310 nm. The standard defines the requirements for Gigabit Ethernet operation over both multimode and singlemode fiber.

Although the IEEE originally intended to ratify and publish the 802.3z standard by January 1998, ratification was delayed because of the complexity of running gigabit speeds over multimode fiber. The initial goal was to support multimode-fiber distances of up to 500 meters for campus-backbone architectures. While that distance is achievable over 50-micron multimode fiber, maximum distance limits have been revised downward for 62.5-micron multimode fiber when operating with an 850-nm light source.

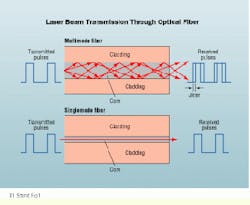

In both multimode and singlemode fiber, light propagates through the core of the fiber. Multimode fiber, with a typical core diameter of 62.5 or 50 microns, effectively couples light from low-cost light-emitting-diode (LED)-based transmitters. Singlemode fiber has a core diameter of 10 microns and is best suited for laser-based transmission. Much of the installed base of optical fiber supporting local-area-network (LAN) backbones is multimode because most of the current-generation 10- or 100-megabit-per-second LAN equipment is LED-based.

Gigabit Ethernet, which signals at 1.25 gigabaud (1 Gbit/s of data after 8B/10B encoding), is too fast for LEDs and requires laser sources. The standard has introduced laser-based transmission over multimode fiber, and this new type of transmission brings new physical-layer issues.

Differential mode delay

The main issue with laser-based data transmission over multimode fiber is differential mode delay (DMD), the effect produced when a laser beam is launched directly into the center of the fiber's core. Because of imperfections in the core's refractive-index profile, the laser beam splits into two or more modes, or paths, of light. The different modes are subject to different propagation delays and arrive at the receiver with a time skew, which causes jitter.
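To make the timing effect concrete, the sketch below converts a per-kilometer delay spread between modes into skew at the receiver and compares it with one bit period at the 1.25-gigabaud line rate (0.8 ns). The 1 ns/km spread is a hypothetical figure chosen for illustration, not a measured or standardized DMD value; the point is only that skew grows with length and soon becomes a sizable fraction of a bit.

```python
# Sketch: how differential mode delay (DMD) turns into timing skew at the receiver.
# The delay spread used below is HYPOTHETICAL; real DMD depends on the fiber's
# refractive-index profile and on the launch condition.

LINE_RATE_BAUD = 1.25e9                  # Gigabit Ethernet line rate (1.25 Gbaud)
UNIT_INTERVAL_NS = 1e9 / LINE_RATE_BAUD  # one bit period: 0.8 ns


def mode_skew_ns(delay_spread_ns_per_km: float, length_m: float) -> float:
    """Arrival-time difference between the fastest and slowest modes, in ns."""
    return delay_spread_ns_per_km * (length_m / 1000.0)


def skew_fraction_of_ui(delay_spread_ns_per_km: float, length_m: float) -> float:
    """Skew expressed as a fraction of one bit period."""
    return mode_skew_ns(delay_spread_ns_per_km, length_m) / UNIT_INTERVAL_NS


if __name__ == "__main__":
    spread = 1.0  # hypothetical: 1 ns of mode-to-mode delay per km
    for length in (100, 300, 550):
        print(f"{length:4d} m -> skew {mode_skew_ns(spread, length):.2f} ns "
              f"({skew_fraction_of_ui(spread, length):.0%} of a bit period)")
```

Because skew scales linearly with length in this simple model, halving the run halves the skew, which is the intuition behind the strict length limits discussed below.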

Because of its narrow 10-micron core, singlemode fiber minimizes the distortion by allowing only a single path of light to propagate. Signal attenuation, rather than distortion, is the limiting factor for singlemode transmission. For this reason, singlemode fiber can transmit light for substantially longer distances than multimode fiber.

To address DMD, 1310-nm installations can include a specialized mode-conditioning patch cord that offsets the laser launch. This technique directs the laser beam off-center into the multimode fiber's core. Even with the offset patch cord, however, gigabit transmission over multimode fiber is subject to strict length limitations.

Physical-layer topologies

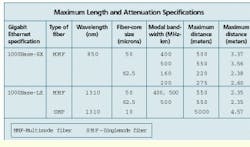

The channel length limits summarized in the table are based on a thorough study of the DMD effects. Length restrictions for multimode fiber are a function of the modal bandwidth, fiber-core size, and operating wavelength of the light source. For example, when the common 160-megahertz-kilometer, 62.5-micron fiber is used with 1000Base-SX, channel length is limited to 220 meters.
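The dependence on modal bandwidth can be illustrated with a first-order bandwidth-distance model, in which a fiber rated at B MHz-km provides roughly B/L MHz over a length of L km. This linear scaling is a simplifying assumption; the 802.3z limits came from the detailed DMD study mentioned above, so the sketch shows the trend rather than reproducing the table. The function names are illustrative.

```python
# First-order sketch of why multimode length limits depend on modal bandwidth.
# ASSUMPTION: usable bandwidth scales inversely with length (linear
# bandwidth-distance model). This illustrates the trend only; it does not
# reproduce the 802.3z table.


def available_bandwidth_mhz(modal_bw_mhz_km: float, length_m: float) -> float:
    """Approximate modal bandwidth remaining over a link of the given length."""
    if length_m <= 0:
        raise ValueError("length must be positive")
    return modal_bw_mhz_km / (length_m / 1000.0)


def max_length_m(modal_bw_mhz_km: float, required_bw_mhz: float) -> float:
    """Longest link that still provides `required_bw_mhz` under the linear model."""
    return 1000.0 * modal_bw_mhz_km / required_bw_mhz


# 160 MHz-km, 62.5-micron fiber over the 220 m 1000Base-SX limit:
print(round(available_bandwidth_mhz(160, 220)))  # about 727 MHz
```

Under this model, a fiber with three times the modal bandwidth supports three times the length for the same required bandwidth, which is why higher-bandwidth 50-micron fiber reaches farther.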

The attenuation limits listed in the table are derived from the supported distances, and they are considerably tighter than the dynamic range of the transceivers alone would require. For example, the link power budget for multimode fiber is 7.5 dB, yet the channel attenuation limits in the table are far lower, because they were specified on the basis of maximum distance rather than available optical power.
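The arithmetic below shows how much of the 7.5-dB multimode power budget remains once a channel attenuation limit is subtracted, using the 2.35-dB figure cited later in this article as the example. In 802.3z this remainder is set aside for link power penalties and margin rather than for cable loss; the function name is illustrative.

```python
# Compare the multimode link power budget quoted in the text (7.5 dB) with a
# channel attenuation limit. 2.35 dB is the tightest limit cited in this article;
# substitute the value from the table for the channel type under test.

LINK_POWER_BUDGET_DB = 7.5


def remaining_budget_db(channel_loss_limit_db: float,
                        power_budget_db: float = LINK_POWER_BUDGET_DB) -> float:
    """Portion of the power budget that is NOT available to cable attenuation."""
    if channel_loss_limit_db > power_budget_db:
        raise ValueError("channel loss limit cannot exceed the power budget")
    return power_budget_db - channel_loss_limit_db


print(f"{remaining_budget_db(2.35):.2f} dB")  # 5.15 dB
```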

These attenuation limits are very tight compared with typical LAN requirements, and the TIA TR41.8.1 fiber-optic systems task group has expressed concern that currently standardized field-testing procedures might falsely fail many acceptable fiber-optic links.

Field testing

The 802.3z standard specifies that field measurements of fiber-optic attenuation be made in accordance with TIA-526-14-A, a document of the Telecommunications Industry Association and the Electronic Industries Alliance (TIA/EIA--Arlington, VA). According to the May 13, 1998, liaison letter that the TIA fiber-optic working group addressed to the IEEE, this requirement may create a serious problem. At issue is the fact that fiber-optic attenuation measured with an LED source, as specified in TIA-526-14-A, is higher than it would be with a laser source: an LED fills the entire multimode core and excites high-order modes, which are attenuated more, whereas a laser concentrates its power in lower-order modes. The IEEE channel attenuation budget assumes losses based on laser sources.

The liaison letter states: "We are concerned that users may reject acceptable systems because of attenuation based on LED test sets as established in TIA-526-14-A." The TIA FO2.2 task group has been asked to develop a solution to this field-testing issue. This work is not expected to begin until this month.

Channel-length measurement is the most direct and accurate method available for characterizing the headroom of Gigabit Ethernet applications over fiber. The tight attenuation limits specified in the IEEE standards are based on the channel length limits. Therefore, the safest and most conservative alternative to attenuation measurement is channel length measurement. Field testers capable of measuring the length of fiber-optic installations can qualify all the different variations of 1000Base-X channels listed in the table above.
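Testers measure length in different ways, and this article does not say which method a particular instrument uses. One common approach, sketched here as an assumption, times a light pulse's round trip to the far end and converts it to distance with L = c * t / (2n), where n is the fiber's group index. The default of 1.47 is a typical multimode value assumed for illustration; in practice the cable manufacturer's figure should be used.

```python
# Sketch of a time-of-flight length estimate. ASSUMPTIONS: the tester times a
# pulse reflected from the far end, and the fiber's group index is about 1.47
# (a typical value; use the cable manufacturer's figure in practice).

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def fiber_length_m(round_trip_s: float, group_index: float = 1.47) -> float:
    """Length from round-trip time: L = c * t / (2 * n)."""
    if round_trip_s < 0 or group_index <= 0:
        raise ValueError("round-trip time must be >= 0 and group index > 0")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / (2.0 * group_index)


print(round(fiber_length_m(2.2e-6)))  # 224
```

With these assumptions, a 2.2-microsecond round trip works out to about 224 m, which would exceed the 220-meter 1000Base-SX limit on 160-MHz-km, 62.5-micron fiber. An error in the assumed group index translates directly into a proportional length error.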

Determining standards compliance

There are seven different maximum channel-length specifications for Gigabit Ethernet over fiber-optic cabling. Testing for the proper maximum-length specification is extremely important when qualifying new or existing cabling runs for compliance with the 1000Base-X standard.

With so many different maximum distances, it can be very confusing for installers and end-users to ensure they are testing to the correct length limits. To keep track of all the various types of fiber, modal bandwidths, and maximum distances, it is recommended that installers use a test instrument that measures both optical loss and channel length on installed multimode and singlemode fiber. Ideally, the instrument should include a database of network and cabling standards, as well as specifications of various manufacturers' cable. It should also allow customization in case a particular manufacturer's cable is not included in the database.
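A minimal sketch of such a limits database, assuming a simple lookup keyed by service, core diameter, and modal bandwidth. The table name and `check_length` function are hypothetical; the lengths are the commonly cited 802.3z operating ranges and should be confirmed against the standard before being used for certification. The two 50-micron 1000Base-LX entries share a 550-meter limit, so a summary table may list them as one row.

```python
from typing import Optional

# Hypothetical limits database keyed by (service, core diameter in microns,
# modal bandwidth in MHz-km). Modal bandwidth is None for singlemode fiber.
# Values are the commonly cited IEEE 802.3z operating ranges; confirm them
# against the standard before using them for certification.
MAX_CHANNEL_LENGTH_M = {
    ("1000Base-SX", 62.5, 160): 220,
    ("1000Base-SX", 62.5, 200): 275,
    ("1000Base-SX", 50.0, 400): 500,
    ("1000Base-SX", 50.0, 500): 550,
    ("1000Base-LX", 62.5, 500): 550,
    ("1000Base-LX", 50.0, 400): 550,
    ("1000Base-LX", 50.0, 500): 550,
    ("1000Base-LX", 10.0, None): 5000,
}


def check_length(service: str, core_um: float, modal_bw_mhz_km: Optional[int],
                 measured_m: float) -> str:
    """Return 'PASS' or 'FAIL' for a measured channel length."""
    key = (service, core_um, modal_bw_mhz_km)
    if key not in MAX_CHANNEL_LENGTH_M:
        # Unlisted cable: the user must add a custom entry first.
        raise KeyError(f"no length limit on file for {key}; add a custom entry")
    return "PASS" if measured_m <= MAX_CHANNEL_LENGTH_M[key] else "FAIL"


print(check_length("1000Base-SX", 62.5, 160, 240.0))  # FAIL: over 220 m
print(check_length("1000Base-LX", 62.5, 500, 240.0))  # PASS
```

The same 240-meter run passes or fails depending on the service and fiber type, which is exactly the bookkeeping the text warns is easy to get wrong by hand.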

Calculating optical-loss budgets

Before Gigabit Ethernet, most fiber cable used in LANs could pass certification with loss budgets as high as 5 or 10 dB. Depending on the fiber type, there are now six different Gigabit Ethernet attenuation limits for an installed fiber link. These loss budgets are much tighter than any other network specification--as low as 2.35 dB--and depend on the core size, the operating wavelength, and whether the fiber is singlemode or multimode.

With a traditional power and loss meter, calculating the loss budget requires manual calculations and a thorough knowledge of the relevant standards. Loss budgets are determined by the number of connectors, the allowable loss per connector, the length of the fiber, and the allowable loss per kilometer of fiber at each wavelength. Today's advanced fiber-certification tools automate this complex process by calculating the correct loss budgets and comparing them to the measured test data to determine a "pass" or "fail" result for each fiber at each wavelength tested.
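The loss-budget arithmetic these tools automate can be sketched as follows. The per-connector, per-splice, and per-kilometer allowances are assumed example values in the style of TIA-568-A, not figures from this article, and the `Link`, `cabling_budget_db`, and `certify` names are hypothetical. The certification step takes the tighter of the calculated cabling budget and the Gigabit Ethernet channel attenuation limit, which is why links that once passed can now fail.

```python
from dataclasses import dataclass

# ASSUMED default allowances (typical TIA-568-A-style figures); replace them with
# the values from the standard or cable specification that governs the job.
CONNECTOR_PAIR_LOSS_DB = 0.75
SPLICE_LOSS_DB = 0.3


@dataclass
class Link:
    length_km: float
    connector_pairs: int
    splices: int = 0


def cabling_budget_db(link: Link, fiber_db_per_km: float,
                      connector_db: float = CONNECTOR_PAIR_LOSS_DB,
                      splice_db: float = SPLICE_LOSS_DB) -> float:
    """Allowed loss = length * loss/km + connector pairs * loss + splices * loss."""
    return (link.length_km * fiber_db_per_km
            + link.connector_pairs * connector_db
            + link.splices * splice_db)


def certify(measured_db: float, link: Link, fiber_db_per_km: float,
            application_limit_db: float) -> str:
    """PASS only if the measured loss is within the tighter of the cabling
    budget and the application's channel attenuation limit."""
    allowed = min(cabling_budget_db(link, fiber_db_per_km), application_limit_db)
    return "PASS" if measured_db <= allowed else "FAIL"


# Example at 1300 nm: 500 m of multimode fiber (assumed 1.5 dB/km) with three
# connector pairs gives a 3.0 dB cabling budget, but the 2.35 dB Gigabit
# Ethernet limit cited in this article is tighter, so a 2.6 dB reading fails.
link = Link(length_km=0.5, connector_pairs=3)
print(cabling_budget_db(link, 1.5))   # 3.0
print(certify(2.6, link, 1.5, 2.35))  # FAIL
```

A tester would repeat the `certify` check for each wavelength, with the fiber loss per kilometer and the application limit appropriate to that wavelength.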

Fanny Mlinarsky is vice president of engineering at Scope Communications Inc. (Marlborough, MA), a division of Hewlett-Packard. Scope Communications manufactures the WireScope 155 tester for testing copper and fiber-optic cabling.

When a laser beam is transmitted through multimode fiber, its large core permits multiple paths of light to arrive over a range of propagation delays, causing jitter (top). In contrast, the narrow core of singlemode fiber permits just a single path of light to propagate, so jitter is not a problem (bottom).