Federal government involvement is driving improved practices for more-efficient operation.

by Patrick McLaughlin

If anybody ever joked that it would take an act of Congress to rein in the mushrooming amount of energy consumption in data centers, the joking stopped on December 20, 2006, when Congress enacted Public Law 109-431: An Act to Study and Promote the Use of Energy Efficient Computer Servers in the United States.

Brief by lawmaking standards, the Act directed the Environmental Protection Agency (www.epa.gov), through its Energy Star program, to study and report back to Congress its findings “analyzing the rapid growth and energy consumption of computer data centers by the Federal Government and private enterprise.”

The EPA’s directive included nine points to be studied, and gave the agency 180 days in which to report back. The EPA released a draft report in April before returning the full report, a 130-page document formally titled “Report to Congress on Server and Data Center Energy Efficiency,” dated August 2 (available at www.energystar.com). In a press release, the EPA stated that its report “shows that data centers in the United States have the potential to save up to $4 billion in annual electricity costs through more energy efficient equipment and operations, and the broad implementation of best management practices.”

Among the notable findings:

- Data centers in the U.S. consumed approximately 60 billion kilowatt-hours (kWh) in 2006, roughly 1.5% of the nation’s total electricity consumption.

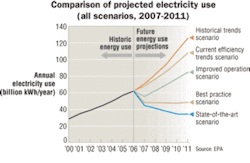

- Servers’ and data centers’ energy consumption doubled over the most recent five years and is expected to nearly double again in the next five, to more than 100 billion kWh, at which point it will cost approximately $7.4 billion annually.

- Existing technologies and strategies could reduce typical server energy use by approximately 25%, while advanced technologies could effect further reductions.
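As a rough check on these figures, the sketch below derives the average electricity price and the annual growth rate they imply. The inputs are the rounded numbers quoted above (the 100 billion kWh projection is a floor, since the report says "more than", and the projected cost is taken as $7.4 billion); the function names are illustrative and do not come from the EPA report.

```python
def implied_price_per_kwh(annual_cost_usd: float, annual_kwh: float) -> float:
    """Average electricity price implied by a total cost and a total consumption."""
    return annual_cost_usd / annual_kwh


def implied_annual_growth(start_kwh: float, end_kwh: float, years: int) -> float:
    """Compound annual growth rate that takes start_kwh to end_kwh in `years` years."""
    return (end_kwh / start_kwh) ** (1 / years) - 1


# Rounded figures quoted in the article.
KWH_2006 = 60e9          # approximately 60 billion kWh consumed in 2006
KWH_PROJECTED = 100e9    # "more than 100 billion kWh" five years later (lower bound)
COST_PROJECTED = 7.4e9   # approximately $7.4 billion per year at that point

price = implied_price_per_kwh(COST_PROJECTED, KWH_PROJECTED)
growth = implied_annual_growth(KWH_2006, KWH_PROJECTED, years=5)

print(f"Implied average price: ${price:.3f} per kWh")
print(f"Implied annual growth: {growth:.1%}")
```

Because the projection is stated as a lower bound, the implied price is an upper bound and the implied growth rate is a lower bound.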

Three levels of enhancement

After detailing the reasons it believes data center energy consumption will continue to grow rapidly over the next five years, as well as the implications of that increased consumption, the EPA report turns its attention to opportunities for energy efficiency. Specifically, the report defines and describes three scenarios (“improved operation,” “best practice,” and “state of the art”) that can incrementally increase efficiency.

Improved operation efforts, which the EPA says require little or no capital investment, include continuing the current trend toward server consolidation; eliminating unused servers; adopting energy-efficient servers to a modest level; enabling power management on all applicable servers; and assuming a modest decline in energy use of enterprise storage equipment. Under this scenario, the EPA says sites will gain a 30% improvement in infrastructure (power and cooling) energy efficiency from improved airflow management.

Best practice efforts include the measures taken in the “improved operation” scenario, but with moderate server consolidation, aggressive adoption of energy-efficient servers, and assuming moderate storage consolidation. Additionally, best-practice efforts call for implementing improved transformers and uninterruptible power supplies; improved efficiency chillers, fans, and pumps; as well as free cooling. Following these procedures will garner up to 70% improvement in infrastructure energy efficiency, the report says.

State-of-the-art efforts include aggressive server and storage consolidation, as well as enabling power management at the data center level of applications, servers, and equipment for networking and storage. Those steps plus direct liquid cooling and combined heat and power will yield up to 80% improvement in infrastructure energy efficiency, the report states.

The EPA stressed that these descriptions are not comprehensive, but rather representative of a subset of energy-efficiency strategies that could be employed.

Practical implications for cabling

Clearly, there is no silver-bullet single action that data center managers can take to improve their systems’ energy efficiency. The interdependence of data center systems matters as much in the pursuit of energy efficiency as it does in data transmission.

Dr. Robert Schmidt of IBM is credited with first describing the data center as an ecosystem, and the terminology has attracted many followers. “A data center is made up of many components, including the room itself, the floor structure (raised or not raised, pressurized or not pressurized), and cable routing, whether it is overhead or underfloor,” says Herb Villa, technical manager with Rittal Corp. (www.rittal.com). “Add to that the overall building systems like lighting, security, enclosures, and the components in those enclosures. All of these components and systems affect the performance of something else.”

Villa continues, “No data center component is an island. All must be viewed as an entire system. None can be completely valued independently.” To that end, he says, often data center sites have different personnel in charge of different components: “Sometimes, the group responsible for the network cabling and switches is not the same group that is responsible for the servers.”

If all these systems are going to work together, Villa adds, it is essential to get the personnel running them together. Today, that’s often the case. “It used to be that when I would go to a customer, I would talk to the IT personnel,” Villa says. “Today, I talk to facilities personnel: plumbing, electricians, and others. Everyone is on the same page much earlier in the process than in the past.”

Marc Naese, solutions development manager with Panduit (www.panduit.com), says, “Overall, we see four critical areas of the data center: the entire infrastructure, network components, storage components, and computing resources. All four areas must interoperate.” Naese adds, “We have developed our solutions to address specific issues in each of those areas. On a room level, cooling supports the entire ecosystem. As you get down to a rack or cabinet level, the needs change drastically. Side-to-side versus front-to-back airflow is an example.”

Naese concludes, “Before you can understand your power or cooling requirements, you must know how many servers you are going to deploy. From there, you can calculate what your requirements will be, what the cabinets will look like, and how dense those cabinets will be.”
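The planning order Naese describes, starting from server count and working out to power, cooling, and cabinet density, can be sketched as follows. The per-server wattage and servers-per-cabinet values are hypothetical placeholders, not figures from Panduit or the EPA report, and the sketch assumes that essentially all electrical power drawn by the servers must be removed as heat (1 watt is about 3.412 BTU per hour).

```python
import math

WATTS_TO_BTU_PER_HOUR = 3.412  # standard conversion: 1 W is about 3.412 BTU/hr


def size_deployment(server_count: int, watts_per_server: float, servers_per_cabinet: int) -> dict:
    """Estimate cabinet count, electrical load, and heat load from a server count."""
    if server_count <= 0 or watts_per_server <= 0 or servers_per_cabinet <= 0:
        raise ValueError("all inputs must be positive")
    cabinets = math.ceil(server_count / servers_per_cabinet)
    total_watts = server_count * watts_per_server
    return {
        "cabinets": cabinets,
        "total_kw": total_watts / 1000,
        # Average density across all cabinets; a partly filled last cabinet lowers it.
        "kw_per_cabinet": total_watts / 1000 / cabinets,
        # Assumes all server power ends up as heat the cooling system must remove.
        "cooling_btu_per_hour": total_watts * WATTS_TO_BTU_PER_HOUR,
    }


# Hypothetical inputs chosen only for illustration.
plan = size_deployment(server_count=200, watts_per_server=400, servers_per_cabinet=20)
for name, value in plan.items():
    print(f"{name}: {value}")
```

Changing the servers-per-cabinet figure shows the trade-off Naese points to: fewer, denser cabinets carry the same total load but concentrate more heat in each one.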

Keep them separated

Ian Seaton, technology marketing manager with Chatsworth Products Inc. (www.chatsworth.com), points out, “When you follow industry understanding of best practices, everything that is done in the data center is really designed to separate the supply air from the return air as much as possible.” That’s why, he explains, the hot-aisle/cold-aisle setup was established: “It’s why you seal off access cutouts, install blank tiles, and locate your cooling units in the hot aisles so you prevent your return-air path from migrating into the cold-aisle space.”

Seaton adds, “If you look at a data center’s entire cooling system as an ecosystem, by virtue of maintaining complete isolation between supply air and return air, you can allow the supply air to be raised in temperature to equal the delivered air temperature. Most want the equipment to see air between 68° and 77°. To get that temperature, your supply air is typically delivered in the 52° to 55° range. Follow the line of your cooling system, and you’ll find the chilled-water temperature coming off the condenser is in the low 40s. When you eliminate mixing [of supply air and return air], you can raise your supply air from 52° to 72°, in which case the chilled-water temperature can be 60°.”
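Seaton's point about mixing can be illustrated with a simple linear mixing model, in which the air reaching the equipment is a blend of supply air and recirculated return air. This is an assumed simplification rather than a model taken from Seaton or the EPA report, and the 95° return-air temperature is a hypothetical value chosen only for the example.

```python
def inlet_temperature(supply_f: float, return_f: float, recirculated_fraction: float) -> float:
    """Equipment inlet temperature when a fraction of the inlet air is recirculated return air."""
    if not 0.0 <= recirculated_fraction <= 1.0:
        raise ValueError("recirculated_fraction must be between 0 and 1")
    return (1 - recirculated_fraction) * supply_f + recirculated_fraction * return_f


def required_supply(target_inlet_f: float, return_f: float, recirculated_fraction: float) -> float:
    """Supply temperature needed to hit a target inlet temperature at a given mixing level."""
    if not 0.0 <= recirculated_fraction < 1.0:
        raise ValueError("recirculated_fraction must be at least 0 and less than 1")
    return (target_inlet_f - recirculated_fraction * return_f) / (1 - recirculated_fraction)


ASSUMED_RETURN_F = 95.0  # hypothetical return-air temperature, not from the article
TARGET_INLET_F = 72.0    # within the 68-to-77-degree window Seaton cites

for fraction in (0.45, 0.25, 0.0):
    supply = required_supply(TARGET_INLET_F, ASSUMED_RETURN_F, fraction)
    print(f"{fraction:.0%} recirculation -> supply air of about {supply:.1f} degrees")
```

Under these assumptions, heavy recirculation forces supply air down into the low 50s to hold a 72° inlet, while with no mixing the supply air can itself be 72°, which is the effect Seaton describes.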

Adopting the data-center-as-ecosystem concept may be necessary for managers to achieve the energy efficiencies put forth in the EPA’s report to Congress. And while cabling infrastructure, including racks and cabinets, might play only a small part, the ecosystem mentality dictates that each part affects others.

Next month, we will delve further into the EPA report and discuss the activities of The Green Grid, an energy-consumption-conscious consortium in the IT industry.

PATRICK McLAUGHLIN is chief editor of Cabling Installation & Maintenance.