Preparing for 100–GbE in the data center

Fiber performance and connectivity technology will be key factors in supporting 100–Gig networks.

by Jennifer Cline

With the continued requirement for expansion and growth in the data center, cabling infrastructures must provide reliability, manageability, and flexibility. Deployment of an optical connectivity solution allows for an infrastructure that meets these requirements for current applications and data rates.

Scalability is an additional key factor when choosing the type of optical connectivity. Scalability refers not only to the physical expansion of the data center with respect to additional servers, switches, or storage devices, but also to the infrastructure to support a migration path for increasing data rates. As technology evolves and standards are completed to define data rates, such as 40– and 100–Gbit Ethernet, Fibre Channel data rates of 32 Gbits/sec and beyond, and Infiniband, today's cabling infrastructures must provide scalability to accommodate the need for more bandwidth in support of future applications.

With the rising demand to support high bandwidth applications, current data rates will not be able to meet the needs of the future. And with Ethernet applications currently operating at 1 and 10 Gbits/sec, it is clear that to support future networking requirements, 40– and 100–Gbit Ethernet (GbE) technologies and standards must be developed.

Multi–faceted drivers

Many factors are driving the requirement for higher data rates. Switching and routing, as well as virtualization, convergence, and high–performance computing environments, are examples of where these higher network speeds will be required within the data center environment. Additionally, Internet exchanges and service provider peering points and high bandwidth applications, such as video–on–demand, will drive the need for a migration from 10–GbE to 40– and 100–GbE interfaces.

In response to the aforementioned drivers, the Institute of Electrical and Electronics Engineers (IEEE; www.ieee.org) formed the IEEE 802.3ba task group in January to address and develop guidance for 40– and 100–GbE data rates. The project authorization request (PAR) objectives included a minimum 100–meter distance for laser–optimized 50/125–µm multimode (OM3) fiber. OM3 fiber is the only multimode fiber included in the PAR.

Corning has conducted a data center length distribution analysis that shows 100 meters represents a cumulative 65% of deployed OM3; the expectation is that the 40– and 100–GbE distances over OM3 fiber may be extended beyond 100 meters to address additional data center structured cabling length requirements. Completion of the standard is expected by mid–year 2010.

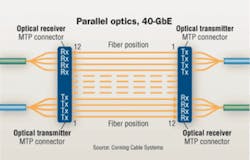

At the IEEE meeting in May, several baseline proposals were adopted to establish a foundation for generating the initial draft of the 40– and 100–GbE standard. Parallel optics transmission was adopted as a baseline proposal for 40– and 100–GbE over OM3 fiber. Compared to traditional serial transmission, parallel optics transmission uses a parallel optical interface in which data is simultaneously transmitted and received over multiple fibers.

This baseline proposal defines 40– and 100–GbE interfaces as: 4 x 10 Gigabit Ethernet channels on four fibers per direction, and 10 x 10 Gigabit Ethernet channels on 10 fibers per direction, respectively.

The operating distance defined within this proposal is 100 meters, the same as the minimum objective stated in the PAR. Additionally, this proposal allocates 1.5 dB for the total connector loss within the channel.
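The lane arithmetic in the baseline proposal can be sketched in a few lines of Python. This is an illustrative calculation only: the lane counts and the 10-Gbit/sec lane rate come from the proposal as described above, while the names `LANES`, `fibers_required`, and `aggregate_rate_gbps` are hypothetical helpers introduced for this example.

```python
# Lane counts per interface, as described in the baseline proposal above.
LANES = {"40GbE": 4, "100GbE": 10}
LANE_RATE_GBPS = 10  # each lane carries one 10 Gigabit Ethernet channel


def fibers_required(interface: str) -> int:
    """Fibers used by one link: one fiber per lane in each direction."""
    return 2 * LANES[interface]


def aggregate_rate_gbps(interface: str) -> int:
    """Total data rate obtained by running all lanes in parallel."""
    return LANES[interface] * LANE_RATE_GBPS


for name in LANES:
    print(name, aggregate_rate_gbps(name), "Gbits/sec over",
          fibers_required(name), "fibers")
```

Counting both directions, a 40–GbE link occupies eight fibers and a 100–GbE link occupies 20, which is why the fiber count of the installed backbone matters when planning a migration.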

Based on customer surveys, it is believed that the 100–meter distance defined in the IEEE 802.3ba PAR may not account for a large number of the structured cabling distances found in the data center. To address this issue, an ad–hoc group is investigating methods of extending the reach of the 40– and 100–GbE interfaces over OM3 fiber. While the group is exploring extended distances up to 250 meters, distances over OM3 fiber will likely not extend beyond 150 to 200 meters.

Cabling performance requirements

When evaluating the performance needed for the cabling infrastructure to meet the future requirements for 40– and 100–GbE, three criteria should be considered: bandwidth, total connector insertion loss, and skew. Each of these factors can impact the cabling infrastructure's ability to meet the standard's proposed transmission distance of at least 100 meters over OM3 fiber; additionally, with ongoing studies to extend this distance, performance can become even more critical:

• Bandwidth. OM3 fiber has been selected as the only multimode fiber for 40/100–Gbit consideration. The fiber is optimized for 850–nm transmission and has a minimum 2,000 MHz·km effective modal bandwidth. Fiber bandwidth measurement techniques are available that ensure an accurate measurement of the bandwidth for OM3 fiber. Minimum effective modal bandwidth calculated (minEMBc) is a measurement of system bandwidth for OM3 fiber that offers the most desirable and precise measurement, compared to the differential mode delay (DMD) technique. With minEMBc, a true, scalable bandwidth value is calculated that can reliably predict performance for different data rates and link lengths. With a connectivity solution using OM3 fiber that has been measured using the minEMBc technique, the optical infrastructure deployed in the data center will meet the performance criteria for bandwidth set forth by the IEEE.

• Insertion loss. This is a critical performance parameter in current data center cabling deployments. Total connector loss within a system channel impacts the ability of a system to operate over the maximum supportable distance for a given data rate. As total connector loss increases, the supportable distance at that data rate decreases. The currently adopted baseline proposal for multimode 40– and 100–GbE transmissions states a total connector loss of 1.5 dB for an operating distance up to 100 meters. Thus, you should evaluate the insertion–loss specifications of connectivity components when designing data center cabling infrastructures. With low–loss connectivity components, maximum flexibility can be achieved with the ability to introduce multiple connector matings into the connectivity link.

• Skew. Optical skew, the difference in time of flight between light signals traveling on different fibers, is an essential consideration for parallel–optics transmission. With excessive skew, or delay, across the various channels, transmission errors can occur. While the cabling skew requirements are still under consideration within the task force, deployment of a connectivity solution with strict skew performance ensures compatibility of the cabling infrastructure across a variety of applications. For example, Infiniband, a protocol using parallel–optics transmission, has a cabling skew criterion of 0.75 ns. When evaluating optical cabling infrastructure solutions for 40– and 100–GbE applications, selecting one that meets the skew requirement ensures performance not only for 40– and 100–GbE, but for Infiniband and future Fibre Channel data rates of 32 Gbits/sec and beyond. Additionally, low–skew connectivity solutions validate the quality and consistency of cable designs and terminations to provide long–term reliable operation.
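To give a feel for the skew criterion, the sketch below estimates the skew produced by a simple difference in physical length between two fibers in the same link. It is a rough illustration, not a compliance check: the group index of 1.48 is an assumed typical value for multimode fiber near 850 nm, the function names are hypothetical, and real skew also depends on fiber–to–fiber variation that this model ignores. Only the 0.75–ns figure comes from the text.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
ASSUMED_GROUP_INDEX = 1.48        # assumption: typical multimode value near 850 nm
INFINIBAND_SKEW_LIMIT_NS = 0.75   # cabling skew criterion quoted in the text


def skew_ns(length_difference_m: float,
            group_index: float = ASSUMED_GROUP_INDEX) -> float:
    """Time-of-flight difference, in ns, caused by a fiber length difference."""
    delay_s = length_difference_m * group_index / SPEED_OF_LIGHT_M_PER_S
    return delay_s * 1e9


def meets_skew_limit(length_difference_m: float,
                     limit_ns: float = INFINIBAND_SKEW_LIMIT_NS) -> bool:
    """True if the length-induced skew stays within the given limit."""
    return skew_ns(length_difference_m) <= limit_ns


for diff_m in (0.05, 0.10, 0.20):
    print(f"{diff_m:.2f} m difference -> {skew_ns(diff_m):.2f} ns,",
          "within limit" if meets_skew_limit(diff_m) else "exceeds limit")
```

Under these assumptions light takes roughly 5 ns to travel each meter of fiber, so a 0.75–ns limit leaves only about 15 cm of allowable length mismatch across the fibers of a link.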

Deploying in the data center

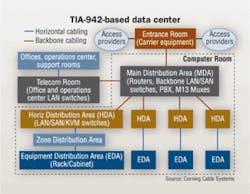

Recommended cabling infrastructure deployments in the data center are based upon guidance found in the TIA–942 Telecommunications Infrastructure Standard for Data Centers. A star topology in a structured cabling implementation provides the most flexible and manageable infrastructure. Many data center deployments use the reduced topology described in TIA–942, in which Horizontal Distribution Areas are collapsed to the Main Distribution Area (MDA). In this collapsed architecture, the cabling is installed between the MDA and the Zone or Equipment Distribution Areas.

For optimized performance in meeting data center requirements, the topology of the cabling infrastructure should not be selected alone; infrastructure topology and product solutions must be considered in unison.

Cabling deployed in the data center must be selected to provide support for high–data–rate applications of the future, such as 100–GbE, Fibre Channel, and Infiniband. In addition to being the only grade of multimode fiber to be included in the 40– and 100–GbE standard, OM3 provides the highest performance for today's needs. With an 850–nm bandwidth of 2,000 MHz·km or higher, OM3 fiber provides the extended reach often required for structured cabling installations in the data center. OM3 fiber connectivity continues to offer the lowest price infrastructure and electronics solution for short–reach applications in the data center.

In addition to performance requirements, the choice of physical connectivity is also important. Because parallel–optics technology requires data transmission across multiple fibers simultaneously, a multifiber or array connector is required. Using MTP–based connectivity in today's installations provides a means to migrate to this multifiber parallel–optic interface when needed.

Factory–terminated MTP solutions allow connectivity through a plug–and–play system. To meet the needs of today's serial Ethernet and Fibre Channel applications, MTP–terminated cabling is installed into preterminated modules, or cassettes. These modules provide a means for transitioning the MTP connector on the backbone cable to single–fiber connectors, such as the LC duplex.

Connectivity into the data center electronics is completed through a standard LC duplex patch cord from the module. When the time comes to migrate to 40– or 100–GbE, the module and LC duplex patch cords are removed and replaced with MTP adapter panels and patch cords for installation into parallel–optic interfaces.

Multiple loss–performance tiers are available for MTP connectivity solutions. Just as connector loss must be considered with such current applications as Fibre Channel and 10–GbE, insertion loss will also be a critical factor for 40– and 100–GbE applications. For example, IEEE 802.3 defines a maximum distance of 300 meters on OM3 multimode fiber for 10–GbE (10GBase–SR). To achieve this distance, a total connector loss of 1.5 dB is required. As the total connector loss in the channel increases above 1.5 dB, the supportable distance decreases. When extended distances or multiple connector matings are required, low–loss performance modules and connectivity may be necessary.
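A connector loss budget check of this kind is simple to express in code. The sketch below sums the insertion loss of each mated pair in a channel and compares the total with the 1.5–dB allocation cited above. The per–mating loss values and the helper names are hypothetical, chosen only to illustrate the arithmetic; actual figures should come from the component manufacturer's specifications.

```python
CONNECTOR_LOSS_BUDGET_DB = 1.5  # total connector loss allocation cited in the text


def total_connector_loss_db(mating_losses_db):
    """Sum the insertion loss of every mated connector pair in the channel."""
    return sum(mating_losses_db)


def within_budget(mating_losses_db, budget_db=CONNECTOR_LOSS_BUDGET_DB):
    """True if the channel's total connector loss fits the budget."""
    # Small tolerance guards against floating-point rounding at the limit.
    return total_connector_loss_db(mating_losses_db) <= budget_db + 1e-9


# Hypothetical per-mating losses in dB, for illustration only.
standard_channel = [0.75, 0.75, 0.5]
low_loss_channel = [0.35, 0.35, 0.35, 0.35]

for label, channel in (("standard", standard_channel),
                       ("low-loss", low_loss_channel)):
    print(label, f"{total_connector_loss_db(channel):.2f} dB,",
          "within budget" if within_budget(channel) else "over budget")
```

With these illustrative values, three standard matings exceed the allocation while four low–loss matings fit within it, which is the flexibility argument for low–loss components.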

Additionally, to eliminate concerns of potential modal noise effects with total connector loss increases, solutions should have undergone 10–GbE system modal noise testing by the connectivity manufacturer. Selecting a high–quality connectivity solution that provides low insertion loss and eliminates modal noise concerns ensures reliability and performance in the data center cabling infrastructure.

MTP–based solutions

With inherent modularity and optimization for a flexible structured cabling installation, MTP–based OM3 optical–fiber systems can be installed for use in today's data center applications while providing an easy migration path to future higher–speed technologies, such as 40– and 100–GbE.

JENNIFER CLINE is market manager for private–network data centers with Corning Cable Systems (www.corningcablesystems.com).