Optical solutions for the collocation data center

Making the case for fiber and certain fiber connection interfaces in high-density data center environments.

David Eckell, Corning Cable Systems

We read about cloud computing, software as a service (SaaS) and managed information technology (IT) services, among others, and find that the term “collocation data center” is common among these services. But what exactly is a collocation data center? At its core, it is a common meet-point for businesses to interconnect with telecommunications and network service providers. The facility leases cage space to end users that populate the space with their hardware and software, and manage it. Some facilities provide less-expensive in-row collocation services with lockable cabinets as well. The data center portion of the facility provides value-added outsourced services for businesses by supplying the hardware, software and the IT support staff.

Of major concern in these facilities is the capability of the physical cable plant to handle future needs as well as today’s requirements. Addressing this concern requires a complete view of capabilities, capital expenditures, operating expenses, green initiatives and flexibility for moves, adds and changes, all while adhering to industry standards. But first, let’s explore the drivers behind the need for this type of facility.

Today’s telecommunications infrastructure is seeing the traffic growth that was expected in the late 1990s and early 2000s. The Wall Street Journal reported in March in its Data Hosting and Data Storage Report that analysts are very encouraged by the data hosting space as well. They see strong trends for data hosting based on current demand and future needs with customer-facing, front-end and back-end solutions. They cited the increased consumption of applications and video content with smartphones as a driver in this space.

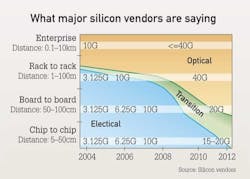

According to silicon vendors, networking equipment is trending toward all-optic devices and the transition will be largely complete within a few years.

SaaS and clouds mean business

A few key drivers for outsourced data hosting and storage involve cloud computing and services like SaaS. SaaS is an application delivery model in which an instance of software is hosted by the collocation data center and is used by the end user. The end user may be billed through subscription or with a pay-as-you-go model. This may sound like an application service provider (ASP) model, but there is a key difference. SaaS is a multi-tenant application that may be provisioned quickly with an automated front end. ASP, on the other hand, requires manual intervention to have an end user up and running. SaaS provides considerable cost savings for the end user and mitigates software service level agreements while enabling the collocation data center manager to scale their offerings with less manual interaction.

Cloud computing is a model that delivers a variety of virtual services, much like SaaS, to a geographically diverse group of end users. A cloud computing facility aggregates the common features found in a data center, such as power, cooling, physical plant and networking equipment, and spreads those costs across hundreds or thousands of users throughout the year. It provides the end user with massive scalability, access to high-end switching equipment, reliability, disaster recovery and cost savings.

This is a good-news story for today’s data center collocation managers. Over the next few years, even under conservative estimates of bandwidth use, the demand for collocation data center servers and storage will increase. However, in order to adequately meet this demand curve, decisions regarding legacy and existing telecommunications infrastructure will need to be made. Questions will surface regarding physical media, design considerations, latency, and the capital and operating expenditures incurred to support the physical plant over the long term. A solid approach to designing and refreshing collocation data centers is necessary.

Media choices and TCO

Media choice is the first consideration in a new build or refresh. This decision significantly impacts the total cost of ownership (TCO) of your data center and, ultimately, the margins that will be generated. At times, it may seem like a sound course of action to continue with legacy copper media types because skill sets and familiarity provide a comfort level; however, there are several key reasons to migrate to an optical physical plant.

First, the industry is clearly following an optical path. As seen in the figure titled “What major silicon vendors are saying,” manufacturers of telecommunications networking equipment are trending toward all-optical devices. Network equipment in the enterprise backbone operating at 10 Gigabit Ethernet (GbE) became all-optical prior to 2004. In the period since, the optical trend has been increasing within the horizontal as data rates move from 1 to 10 GbE. As data rates increase to 40 GbE and beyond, the landscape becomes all-optical in the horizontal. There is no existing guidance from the Institute of Electrical and Electronics Engineers (IEEE; www.ieee.org) for running 40/100 GbE over unshielded or shielded twisted-pair cabling, and the trend suggests it will not be included in the future. This significantly impacts the TCO, because an all-copper backbone would need to be replaced entirely to support 40/100 GbE, while existing OM3 and OM4 fiber-optic physical plants are well-equipped to handle the migration.

While 40 Gbits/sec is available now for InfiniBand and will be available for Ethernet in 2011, some data center managers see a migration to 40G far in the future, leading them to believe that a legacy copper solution is warranted. However, the total cost of doing so throughout the lifespan of the physical plant can be considerable. The first consideration is power consumption. A typical 10G copper PHY chip consumes six to eight watts of power, while the typical optical SFP+ transceiver consumes 0.5 watts. A dual-port 10G copper network interface card (NIC) in a server draws about 24 watts, while an optical SFP+ NIC averages 9 watts. The lower power draw allows network equipment manufacturers to provide optical switches with three to six times the density of current copper-based switches.
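
The per-port arithmetic above can be sketched in a few lines of Python. This is only a rough illustration: the 7-watt copper figure is the midpoint of the quoted six-to-eight-watt range, the 48-port count is a hypothetical example, and the function name is invented here. It covers PHY and transceiver power only, not the power of a complete switch.

```python
# Per-port power figures quoted in the article.
# 7.0 W is the midpoint of the stated 6-8 W range (an assumption for illustration).
COPPER_PHY_WATTS = 7.0
OPTICAL_SFP_PLUS_WATTS = 0.5


def compare_port_power(ports: int) -> dict:
    """Compare PHY/transceiver power for a given number of 10G ports."""
    copper = ports * COPPER_PHY_WATTS
    optical = ports * OPTICAL_SFP_PLUS_WATTS
    return {
        "copper_w": copper,
        "optical_w": optical,
        "saved_w": copper - optical,
        "ratio": copper / optical,
    }


if __name__ == "__main__":
    # 48 ports is a hypothetical port count, not a figure from the article.
    print(compare_port_power(48))
```

Under these assumptions, the copper ports draw 14 times the power of the optical ports at the PHY level, whatever the port count.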

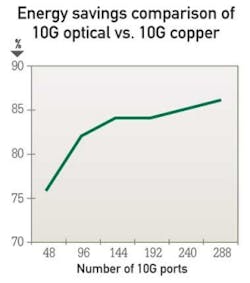

Added to the cost for the additional power is the need for appropriate cooling. According to the Environmental Protection Agency (EPA), each unit of additional network power must be complemented with an equal unit of cooling. For example, a 288-port 10G optical configuration is 86% more energy efficient than its 10G copper equivalent, resulting in significant cooling savings over a copper solution. According to researcher IDC (www.idc.com), some estimates blame up to 60% of data center downtime on heat-related issues. With service level agreements (SLAs) and the potential for liquidated damages, heat-related risks are simply not worth taking. As seen in the energy-savings chart, as port count increases in the data center, there are significant energy savings in using a fiber-based solution over a UTP-based solution.

The added benefit of reducing power and heating/ventilation/air-conditioning (HVAC) consumption is a greener facility. Each kilowatt-hour consumed results in 1.6 pounds of CO2 emissions. By using fiber optics, data centers use less power at both the networking level and cooling level. Because they are using less power, they reduce their carbon footprint and their costs. As elements of cap-and-trade or other governmental controls are initiated, those facilities with copper-based infrastructures may face considerably more costs to offset their carbon footprints.
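
To see how these figures combine, the following Python sketch estimates annual energy and CO2 for a block of 10G ports. It uses the article's numbers for the one-to-one cooling rule and 1.6 pounds of CO2 per kilowatt-hour. The assumptions are that the ports run continuously all year, that copper draws 7 watts per port (the midpoint of the quoted range), and that only PHY or transceiver power is counted. The function names are invented for this example.

```python
HOURS_PER_YEAR = 24 * 365      # assumes continuous, year-round operation
LB_CO2_PER_KWH = 1.6           # figure quoted in the article
COOLING_MULTIPLIER = 2.0       # network power plus an equal unit of cooling


def annual_kwh(network_watts: float) -> float:
    """Annual energy for the network load plus matching cooling, in kWh."""
    return network_watts * COOLING_MULTIPLIER * HOURS_PER_YEAR / 1000


def annual_co2_lb(network_watts: float) -> float:
    """Annual CO2 emissions in pounds for the same load."""
    return annual_kwh(network_watts) * LB_CO2_PER_KWH


if __name__ == "__main__":
    ports = 288
    copper_watts = ports * 7.0    # assumed midpoint of the 6-8 W range
    optical_watts = ports * 0.5   # SFP+ figure from the article
    for name, watts in (("copper", copper_watts), ("optical", optical_watts)):
        print(f"{name}: {annual_kwh(watts):,.0f} kWh, "
              f"{annual_co2_lb(watts):,.0f} lb CO2 per year")
```

Because this counts PHY-level power only, its percentage savings will not match the 86% system-level figure cited for the 288-port example.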

Is copper the fragile medium?

An additional cost to consider when installing the physical plant is the fragile nature of Category 6 and 6A media. As Category 6 and 6A cables have advanced, they have become increasingly susceptible to noise and alien crosstalk. While power-intensive digital signal processing on the line card assists in reducing deterministic noise, the physical media must protect itself against alien crosstalk through tight twists and narrow tolerances for physical separation of copper pairs.

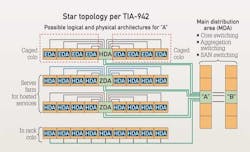

This schematic shows a TIA-942 standard-based layout of a collocation data center.

It may sound curious to say that, in some respects, the high-data-rate copper plant is more fragile than fiber-optic cable, but it is. For example, in order to maintain protection against alien crosstalk, a Category 6A cable has a maximum pulling tension of 25 pounds. A typical fiber-optic cable found in the horizontal has a 100- to 300-pound maximum pulling tension, depending on cable type. As a result, more extensive testing is required to certify copper media, which requires elaborate test equipment. By contrast, fiber-optic testing in high-data-rate environments is fast and cost-effective, which helps to reduce the total cost of the project as well as the time to install the physical plant.

At this point, the data center manager understands that fiber-optic cable has significant operational and financial advantages over copper UTP/STP cable media at 10G. The question is how to address the needs of the data center collocation facility. The data center manager must meet the same reliability, performance and scalability requirements that all data centers demand, but they must meet these requirements for numerous and sometimes very different customers, all within the same facility and all at a quick deployment velocity. The key to being able to provide a scalable solution that meets today’s needs and is ready for the migration to 40 GbE parallel optics is a high-density fiber-optic solution based on the TIA-942 Telecommunications Infrastructure Standard for Data Centers.

Standards-based designs

TIA-942 was designed with speed and flexibility in mind. As the diagram shows, the design is based on a star topology using a main distribution area (MDA) as its focal point. It is from here that the data center manager establishes a main crossconnect that can easily manage moves, adds and changes to facilitate growing customer needs. The MDA feeds several areas within the facility and can do so in different ways, which helps to create an efficient infrastructure. In a typical data center configuration, the MDA houses the core, aggregation and storage area network (SAN) switching. In order to do this effectively, high-density fiber-backbone cabling with preterminated MPO-style connectors should be used. The benefits include reduced congestion in pathways and spaces, improved airflow and higher-density patching areas.

In the diagram, the horizontal distribution area (HDA) at the cage is performing the traditional function as a crossconnect, housing the row’s switching equipment. From here horizontal cabling terminates in the equipment distribution area (EDA). However, the industry is moving to a top-of-rack topology in preparation for parallel optics, so this pushes the HDA to each rack.

This configuration is seen in the bottom row with edge switches distributed to the top of each rack and terminated with point-to-point fiber-optic uplinks back to the MDA. The problem with this scenario is that the home runs back to the MDA from each HDA do not take advantage of the density found in fiber-optic cabling. An additional option being reviewed by TIA/EIA is the addition of a zone distribution area (ZDA) between the MDA and HDA as seen in the middle rows. Here high-density backbone cabling extends from the MDA to a ZDA and terminates with lower-density fiber-optic cabling at the HDA in each rack, which helps further reduce congestion in the spaces and pathways.
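
The pathway difference between the two layouts can be illustrated with a small Python sketch. All numbers here are hypothetical (20 racks, 12 uplink fibers per rack, 144-fiber trunks) and the function names are invented for the example. It simply counts how many cables must run the full distance back to the MDA in each case.

```python
import math


def home_run_cables(racks: int) -> int:
    """Point-to-point layout: one uplink cable per rack runs back to the MDA."""
    return racks


def zda_trunks(racks: int, fibers_per_rack: int, fibers_per_trunk: int) -> int:
    """ZDA layout: uplink fibers are aggregated onto high-density trunks."""
    total_fibers = racks * fibers_per_rack
    return math.ceil(total_fibers / fibers_per_trunk)


if __name__ == "__main__":
    # Hypothetical row: 20 racks, 12 fibers each, 144-fiber backbone trunks.
    print("home runs to MDA:", home_run_cables(20))
    print("trunks to MDA via ZDA:", zda_trunks(20, 12, 144))
```

In this hypothetical row, the ZDA layout replaces 20 long runs with two trunks, leaving only short, lower-density runs from the ZDA to each rack.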

With the higher-density MPO-style connectors and advances in bend-optimized fiber-optic technology, it is now possible to terminate more than 2,300 fibers in a 4U rack space. It would take 127U to accommodate the same number of copper terminations. Providing this level of density allows the data center manager to build the backbone in anticipation of customer needs, while leaving the short runs from the ZDAs to be installed quickly and cost effectively.
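
As a quick check of the density figures above, this Python sketch works out terminations per rack unit. It takes 2,300 as the fiber count (the article says "more than 2,300," so the fiber result is a lower bound), and the variable names are illustrative.

```python
FIBER_TERMINATIONS = 2300   # article: "more than 2,300" in 4U, so a lower bound
FIBER_RACK_UNITS = 4
COPPER_RACK_UNITS = 127     # rack units needed for the same number of copper terminations

fiber_per_u = FIBER_TERMINATIONS / FIBER_RACK_UNITS
copper_per_u = FIBER_TERMINATIONS / COPPER_RACK_UNITS
space_ratio = COPPER_RACK_UNITS / FIBER_RACK_UNITS

if __name__ == "__main__":
    print(f"fiber:  {fiber_per_u:.0f} terminations per U")
    print(f"copper: {copper_per_u:.1f} terminations per U")
    print(f"copper needs {space_ratio:.2f}x the rack space")
```

By these figures, copper needs roughly 32 times the rack space of fiber for the same number of terminations.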

As we have seen, data consumption is rising at a tremendous rate. The value of each rack space is increasing as businesses opt to outsource their hosting, storage and application needs. For facilities looking to cut costs and improve densities, fiber-optic media is the clear choice. In order to provide an infrastructure that will meet the demand of today as well as the requirements of the future, it is critical to employ an MPO-style preterminated fiber-optic solution with bend-optimized fiber. This will significantly improve patching density, minimize congestion in the pathways and spaces and provide the necessary migration path to parallel optics that facilitates 40 GbE and beyond.

David Eckell is systems engineer with Corning Cable Systems (www.corning.com/cablesystems).