Keeping your cool when the racks heat up

Enclosure manufacturers are building technologies into their equipment to help cool the contents.

In distributed data-communication networks, such as those typically found in commercial office buildings, heat loads have long required some type of thermal management. And enclosure manufacturers have responded for years.

"Many of our products have been used to house audio and video equipment in industrial environments, which often subject the equipment to continuous high temperatures," explains Robert Schluter, president of Middle Atlantic Products (www.middleatlantic.com). One approach that Middle Atlantic takes is to ventilate front and rear doors, as well as the tops of enclosures. But it's not as simple or straightforward as it may sound. "It's more than just randomly punching holes in doors," he says. "The placement of holes must be scientifically based to enhance the convection process that's already taking place."

Like several other enclosure makers, Middle Atlantic offers fans and vent panels as options for its enclosure products. The goal is to keep air moving through the enclosure so that the temperature inside does not climb too high.

Data centers heat up

Conditions are significantly more intense, and solutions logically more sophisticated, inside data centers. Server-packed enclosures wreak havoc on air-conditioning systems, and the compaction of server technology is the cause. "Technology has afforded greater processing capabilities in smaller spaces," says Kevin Macomber of APW Wright Line (www.wrightline.com). "Servers are flatter and deeper than they used to be. Servers that occupy 1U of rack space are readily available today." The other factor, Macomber adds, is that the power supplies required inside these servers have increased. The need for redundancy inside data centers also contributes.

Considering that many enclosure systems can hold 42 units of rack space, the potential exists for a significant amount of heat-generating equipment to reside in a single cabinet. "Assume a cabinet has a heat load of 5,000 watts [W]," says John Consoli, vice president of AFCO Systems (www.afcosystems.com). "Dissipating that heat requires the same amount of energy needed to cool a 1,500-square-foot room on a 95° day."

Sanmina-SCI (www.sanmina-sci.com) has stated that "in a traditional data center, it takes 0.6W of air conditioning to remove the heat generated by 1W of computing power." The company also notes that the 20-MHz 80386 chip, the best of its breed in the mid-1980s, needed 1W of input energy. The 800-MHz Pentium III requires 75W of input energy.
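
To put these figures side by side, the short Python sketch below converts Consoli's hypothetical 5,000-W cabinet into common air-conditioning units and applies Sanmina-SCI's 0.6W-per-watt figure. The conversion factors are standard ones (about 3.412 BTU per hour per watt, and 12,000 BTU per hour per ton of cooling); the function and variable names are illustrative only and do not come from any vendor.

```python
# Standard unit conversions.
BTU_PER_HOUR_PER_WATT = 3.412      # 1 W is about 3.412 BTU/h
BTU_PER_HOUR_PER_TON = 12_000.0    # 1 ton of cooling = 12,000 BTU/h

# Figure quoted by Sanmina-SCI for a traditional data center.
COOLING_WATTS_PER_IT_WATT = 0.6


def cooling_summary(heat_load_w: float) -> dict:
    """Express a cabinet heat load in HVAC terms.

    heat_load_w is the heat generated inside the cabinet, in watts.
    """
    btu_per_hour = heat_load_w * BTU_PER_HOUR_PER_WATT
    return {
        "heat_load_w": heat_load_w,
        "btu_per_hour": btu_per_hour,
        "tons_of_cooling": btu_per_hour / BTU_PER_HOUR_PER_TON,
        # Air-conditioning power implied by the 0.6 W per watt figure.
        "cooling_power_w": heat_load_w * COOLING_WATTS_PER_IT_WATT,
    }


if __name__ == "__main__":
    # The 5,000-W cabinet from Consoli's example.
    for name, value in cooling_summary(5_000.0).items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions, a single fully loaded cabinet calls for well over a ton of cooling capacity, which is why the per-cabinet view matters as much as the room-level one.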

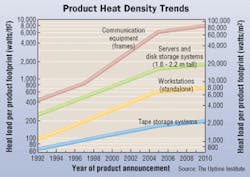

What can be done about such enormous heat loads? That question has triggered something of a cottage industry aimed at finding answers. Several of the enclosure manufacturers offering built-in cooling technology refer to information provided by The Uptime Institute (www.uptimeinstitute.org), a Santa Fe, NM-based organization that provides research, information, and services for data-center and server-farm environments. It characterizes itself as a user-based group comprising mostly Fortune 500 companies, and counts technology heavyweights BellSouth, Sprint, Verizon, Hewlett-Packard, Microsoft, and Sun Microsystems among its members.

Thermal management issues

The group has published several white papers specifically addressing thermal management, with titles including "Heat Density Trends in Data Processing, Computer Systems, and Telecommunications Equipment," "Changing Cooling Requirements Leave Many Data Centers at Risk," and "Alternating Cold and Hot Aisles Provides More Reliable Cooling for Server Farms."

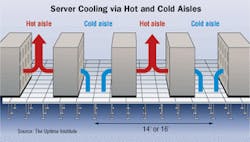

The Institute's Robert F. Sullivan developed the hot aisle/cold aisle concept 10 years ago, and describes it in his white paper. Essentially, a cold aisle has a perforated floor that lets air come up from the underfloor plenum. Equipment racks on either side of the cold aisle face each other, so the air from the plenum cools the equipment and is then sent out the back of the equipment into the hot aisle. The neighboring hot aisle does not have perforated tiles.

"This system is not perfect," Sullivan writes, "because some equipment manufacturers bring cooling air in from the bottom of their equipment and exhaust out through the top and/or sides. And some equipment is designed to bring air in from the top and exhaust out the bottom."

Redefining air flow

Enclosure makers are doing their part to address these thermal-management issues. In many cases, the solutions, like the sources of the problems, are rather sophisticated.

Vendors such as AFCO Systems and APW Wright Line combine technology with air flow to yield cooling measures designed to accommodate high-density server clusters. AFCO's Kool-IT cabinet, launched in 1995, has thermostatically driven fans on top.

"From its conception, the product was designed to be integrated with air conditioning systems," Consoli says. "We developed the product real-time in a user's data center. It's hot inside cabinets, and the answer is not to make the room cooler."

Kool-IT is on its fourth generation, and now offers users the capability to dial in a temperature threshold at which the fans will run at full capacity. Other features include redundant power cords and remote-monitoring capability.

"The original Kool-IT turned the fans on, then when the desired temperature was reached, the fans shut off," Consoli explains. "Now, the fans are speed-controlled. They continuously run at about 20% of capacity, then go to optimum capacity when more cooling is needed." He explains that while it is common knowledge that continuous, excessive heat is hazardous to servers, it is less commonly known that cycles of excessive heat, followed by cool, followed again by excessive heat also can be damaging.

Wright Line considers its Tower of Cool to be an integrated part of a data center's air conditioning system. The manufacturer says that Tower of Cool, introduced this spring, "takes a holistic building approach to equipment cooling inside an enclosure."

The enclosure includes built-in fan trays with a perforated cover, providing a uniform air supply to equipment inside the enclosure, and exhaust fans directly above the rear door that remove heat evenly from top to bottom.

"Previously, all users had to address was some level of air volume to cool up to 4kW," says Macomber. "Now, they must control the direction of air, and the exhausting of heat."

"The Tower of Cool makes the HVAC system work more productively," explains Todd Schneider, Wright Line's director of marketing. "So-called 'hot spots' are eliminated and using the product can reduce HVAC needs by as much as 50%. Generally about 28% of the energy used in a data center is used by the HVAC system. So, this product can reduce cooling-plant energy use by as much as 14%."

Neither AFCO Systems nor Wright Line "went it alone" in developing a cooled enclosure for the data center; both worked with engineering firms. AFCO teamed up with Jack Dale Associates (www.jackdale.com), which defines fluid dynamics of raised-floor cooling systems, and Wright Line worked with data-center design firm RTKL Associates (www.rtkl.com). Each of the engineering firms conducted analysis and published technical papers on their findings and approaches to in-cabinet cooling.

Water-chilled systems

Some vendors have embraced water-chilled systems as the answer to in-cabinet heat problems. Enclosures that include water-chilled cooling systems are available from Sanmina-SCI (www.sanmina-sci.com) and Rittal (www.rittal.com). Sanmina-SCI calls its system EcoBay.

"The amount of heat that can be removed by water is roughly 3,500 times that which can be removed by the same volume of air," write Sanmina-SCI business development manager Peter Jeffery and engineer Tony Sharp in a positioning paper. EcoBay uses bore pipes carrying chilled water. The cooling module is compartmentalized below the cabinet bays. All active components of the cooling system are isolated from the equipment-mounting section of the system. The system is available as an assembly of two or four enclosed cabinets with an integral cooling module, power-distribution units, and a Web-based monitoring system.

In short, Sanmina-SCI says this approach is an alternative to dumping hot air into the data-center environment. Jeffery says he expects that by the fall the company will have a prototype of a system that uses a gaseous mix rather than water to effect enclosure cooling. Also coming, he says, are an internal fire-suppression system and additional monitoring options.

"I don't see a water-chilled system replacing room cooling," says Rittal's Phil Dunn from the exhibit floor at the SuperComm trade show, where he was displaying Rittal's water-chilled enclosure. "It will augment the room air-conditioning system."

Like the Sanmina-SCI system, Rittal's system allows for drip-free disconnects.

"With today's server-density trends and the move toward blade servers," Dunn continues, "we could approach 18 kW of energy in a 42-U enclosure in five years."

Indeed, what lies ahead for server density is a wild card. Sanmina-SCI's Sharp states that perhaps, just perhaps, servers will do a better job of cooling themselves in the future, thanks to improved chip technology. "We have a long way to go until then," he says. In the meantime, data-center managers have some new options from enclosure manufacturers to help in their endless efforts at thermal management.

Patrick McLaughlin is chief editor of Cabling Installation & Maintenance.

Seminar addresses thermal management

The Uptime Institute (www.uptimeinstitute.org) will host a seminar, "High Density Cooling for Future Generations of IT Hardware," October 30 through November 1 in Houston, TX.

The seminar will cover data-center cooling issues in significantly greater depth than space allows in this article. Topics will include:

- Heat density trend projections of 15 computer manufacturers;
- Cooling roadmap for the computer room of the future;
- Back to water cooling?
- The simple arithmetic required for proper raised-floor cooling planning;
- Principles for laying out computer hardware and cooling units.

More information on the seminar is available at The Uptime Institute's Web site.