Metrics and efficiency: the future of data centers

by Patrick McLaughlin

The keynote address at a data center conference outlined the causes of, and pointed toward cures for, power-consumption woes.

Attendees at the Data Center World conference and exhibition in late March had the opportunity to hear presentations and visit product displays focusing on the cabling systems, structured or unstructured, that support data-center operations.

But cabling was far from the only topic on display and under discussion among this group of data-center professionals. As is the case in most data-center conversations today, the themes of power and cooling had a significant presence at this semiannual event.

Organized each spring and fall by AFCOM (www.afcom.com), Data Center World is a gathering of data center administrators. The spring event, held March 25-29 in Las Vegas, NV, featured technical sessions divided into sections covering best practices, data center management, disaster recovery, facilities management, and security. It also offered classroom-style product presentations and an exhibit hall with more than 100 exhibitors displaying their data-center-specific products and services.

“Your job is truly exciting,” said Jill Eckhaus, AFCOM president, in her opening address. “The data center is the lifeline of every organization, and it is your responsibility to sustain its existence through efficient planning, best practices, and in many cases, by thinking on your feet.”

Following Eckhaus’ comments, Christian Belady, PE, distinguished technologist with HP (www.hp.com), delivered the conference’s keynote address. His comments set the stage for the conference presentations that followed, and focused heavily on the seemingly unsolvable power/cooling conundrum. Belady provided a “3-D” view of the problem, examining its drivers, dynamics, and the direction in which it ultimately will lead the data center industry.

Costs down, consumption up

The cost of computation is falling dramatically, Belady stated, and is a primary factor in today’s data center environment. “Computation is a resource,” he commented. “Imagine gasoline at a price of one cent per gallon. How much would you consume?” The analogy applies to the data center, he says, where that low cost of computation is allowing consumers to purchase and use servers more cost-effectively than ever before.

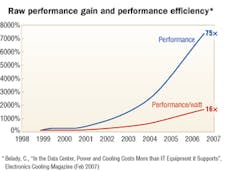

While the cost of computation falls, the footprint in which that computation takes place is also shrinking. Belady pointed out that, on the whole, the computing industry has realized an amazing 75x performance gain in form factor since 1999. Stripping that figure down to an oversimplified example, the space required for a single process in 1999 is enough for 75 such processes to take place today. The blade server, regarded by many as the building block of today’s high-density data center, probably best epitomizes that low-footprint-computing capability.

On their face, these figures appear to be good news for consumers of computational processing (i.e., data center users), but Belady pointed out another statistic that gets to the core of the density/heat issue. While form-factor performance gains of 75x have been realized since 1999, power-efficiency gains, in the form of performance/watt, have increased at a far lower 16x rate. That means the wattage used, and heat generated, in a given processing footprint today far exceeds that of eight years ago.
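The gap between the two figures can be turned into a rough estimate of how much power density has grown. The sketch below is an illustration, not part of Belady's presentation: it assumes both gains are measured against the same 1999 baseline and that a footprint is filled with equipment in both years. The function name `power_density_growth` is hypothetical.

```python
def power_density_growth(perf_per_space_gain: float, perf_per_watt_gain: float) -> float:
    """Return the factor by which watts per unit of space change.

    Uses the identity:
        watts/space = (performance/space) / (performance/watt)
    """
    if perf_per_space_gain <= 0 or perf_per_watt_gain <= 0:
        raise ValueError("gains must be positive")
    return perf_per_space_gain / perf_per_watt_gain


# Figures quoted in the keynote: 75x form-factor gain, 16x performance/watt gain.
growth = power_density_growth(75, 16)
print(f"Power per footprint grew by a factor of about {growth:.1f}")
```

Under those assumptions, a fully populated footprint draws, and must shed as heat, roughly 4.7 times the power it did in 1999.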

This processing/footprint/wattage dynamic has begotten the vexing problem of heat generation in high-density data centers. Data center managers are taking several measures to battle, or at least deal with, heat, including industry-standard hot-aisle/cold-aisle layouts and the use of air-conditioning systems. Even when used judiciously and with layouts designed to maximize their efficiency, air-conditioning systems consume significant amounts of power, a resource that, more like gasoline than like computation, can be in short supply and come at a staggering price.

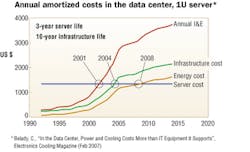

Staying on the theme of costs, Belady noted that when looking at total cost of data-center ownership, infrastructure and energy costs have exceeded information-technology (server) costs for years. The term “infrastructure” includes air-conditioning equipment, including chillers; Belady used the cost of a 1U server for comparative purposes. 2001, he said, was the crossover point when the combination of infrastructure and energy costs exceeded those of IT. In 2004, he added, infrastructure costs alone outpaced those of servers. Next year is when he expects energy by itself to outweigh servers as an annual amortized cost in the data center.

Measuring by metrics

What can be done to solve this situation? Belady contends the answer can be found, to some extent, in metrics: “The most important thing for us as an industry to do is find a way to measure data-center efficiency.”

Data centers are powerful, energy-consuming, and critical to business activity. Yet, Belady says, by their very nature, many of them are not running with a great deal of efficiency.

Today’s data center, he stated in his presentation, can be compared to a car with a V8 engine and the best spark plugs, but running out of tune, with only one piston firing and no air in the tires. Inefficiency is the commonality between them, as Belady detailed. Application growth, he explained, is far exceeding server-performance growth; as a result, utilization in servers is generally less than 20%.

So then, do these servers consume full power only when they are in full use, or do they also consume full power for the 80% of the time when they are essentially unutilized? That is a critical question, and one that is not fully answered, in light of the fact that many data centers run out of power before they run out of real estate.

Establishing metrics by which data-center managers can, in fact, measure these servers’ efficiency is paramount, and the data center industry is headed toward establishing such metrics, Belady maintains. He referred to research and papers addressing efficiency in the overall data center, in cooling, and in servers, citing The Uptime Institute’s (www.upsite.com) Fault Tolerant Specification as the de facto standard. Belady also mentioned that the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE; www.ashrae.org) has published books addressing cooling in data centers in each of the past four years.

Belady also emphasized the work done by The Green Grid (www.thegreengrid.org) on the topic of server efficiency. The Green Grid is a consortium of IT companies (including HP, which counts one of its employees among the group’s board of directors) whose collective goal is to improve energy efficiency in data centers. Chatsworth Products Inc. (www.chatsworth.com) and Panduit Corp. (www.panduit.com), highly recognizable system providers in the cabling industry, are also members of The Green Grid.

Among the group’s objectives, and a theme Belady heavily emphasized in his Data Center World address, is the creation of metrics to provide numerical accountability for a data center’s efficiency. A key measurement in his eyes, perhaps the key measurement, is power usage effectiveness (PUE), which is the ratio of total facility power to IT equipment power. The lower the ratio of total power to IT power, the better. “Those who do this well [achieving a low ratio] will win,” Belady stated plainly.
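As a minimal sketch of that ratio, the function below computes PUE from two power readings. The function name `pue` and the sample figures (1,000 kW for the whole facility, 500 kW for IT equipment) are hypothetical and chosen only for illustration; they are not measurements cited in the address.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power.

    Both arguments must use the same unit (kilowatts here).
    """
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        # Total facility power includes the IT load, so it cannot be smaller.
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


# Hypothetical readings, for illustration only.
example_pue = pue(total_facility_kw=1000.0, it_equipment_kw=500.0)
print(f"PUE = {example_pue:.2f}")
```

With these sample readings the ratio is 2.0, meaning every watt delivered to IT equipment is matched by another watt spent on cooling, power distribution, and other overhead. A value of 1.0 would mean all facility power reaches the IT equipment.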

Sweet spot moving?

A currently emerging practice that is helping efficiency efforts is what Belady referred to as “closely coupled cooling,” which is when a cooling source is placed close to a heat-generating rack. Currently, the “sweet spot” is to place the computer room air conditioner (CRAC) close to the heat source in a setup he calls “CRAC by the rack.” But the industry is headed toward liquid-cooled racks, he claims.

Several providers of liquid-cooled enclosures have presented information to the data-center marketplace and the cabling industry, through publications such as this one as well as at industry conferences. To date the marketplace has been slow to embrace the technology. Still, Belady sees it as the future for data-center cooling efforts.

Overall, Belady’s address emphasized the importance of driving down total cost of ownership of what he described as the complete data center ecosystem, including IT equipment, cooling infrastructure, and power.

He closed by saying that the effectiveness with which data center managers navigate and integrate their industry’s trends will determine cost of ownership and, ultimately, their collective returns on their investments in data centers.

PATRICK McLAUGHLIN is chief editor of Cabling Installation & Maintenance.

Seen and heard at Data Center World

To help network cabling managers seamlessly leverage existing copper electronics with high-density fiber-optics, Transition Networks (www.transition.com) Gigabit Ethernet media conversion technology has been added to Corning Cable Systems’ (www.corningcablesystems.com) portfolio of Plug & Play Universal System products.

The media conversion module features 12 RJ-45 copper ports on the front and two 12-fiber MTP connector ports at the rear. Up to two modules can be placed in the 1U Media Converter Housing, offering 24-copper-port density, and up to eight modules can be placed into the 4U housing for 96-copper-port density.

“Large data centers have a continuous need to upgrade their networks to ensure the effective transport and quality of high volumes of data,” says Bill Schultz, vice president of marketing at Transition Networks. “Corning leads the market and is offering a portfolio of solutions that feature high-density ribbon cabling and MTP connector-based trunking for space-saving and convenient fiber deployment. The addition of our media conversion technology to the Plug & Play Universal Systems line offers more versatility to help organizations optimize the connectivity infrastructure in their data centers.”

The addition of the media converter module to Corning’s product portfolio “allows customers to gain all the benefits of a pre-terminated, modular solution, while using the low-cost legacy copper electronics that are available today,” says Doug Winders, vice president of sales and marketing for Corning Cable Systems Private Networks.

Power, cooling and management solutions provider American Power Conversion (APC; www.apcc.com) is teaming with IBM (www.ibm.com) to provide a new global service product offered by IBM’s site and facilities services unit.

The Scalable Modular Data Center is designed for rapidly deploying a pre-engineered 500- or 1,000-square-foot data center. IBM will offer its customers pre-configured American Power Conversion InfraStruXure solutions, including perimeter or InRow cooling, and NetBotz physical threat monitoring. InfraStruXure integrates power, cooling, racks, management, security and environmental solutions. The Scalable Modular Data Center will utilize IBM’s resources for assessments, project management integration, and ongoing solutions-related maintenance contracts.

“From our experience in marketing products to small- and medium-sized businesses, we designed this solution specifically to meet the customer’s need for flexible infrastructure that can install in virtually any environment, and still support the cost-effective addition of components as a business grows,” says Rob Johnson, APC’s president and chief executive officer.

(APC has also unveiled plans to deliver enterprise software solutions for managing the IT infrastructure.)