Choosing the best media for fiber-in-the-horizontal

More end users are deciding to install fiber in their horizontal networks, directly to desktops or even to wireless access points. In many cases, these fiber installations are driven by the need for higher bandwidth, both initially and over the life of the cabling system.

Once the end user has elected to install fiber-in-the-horizontal, key questions arise:

- What do the standards specify for optical applications that may be used in the horizontal?

- How do you decide what kind of fiber should be used?

- Should it be singlemode or multimode and, if multimode, 50-µm or 62.5-µm fiber?

- What level of bandwidth support is required to sustain today's applications and those into the future?

- How should channel insertion loss be managed to assure reliable support for these applications?

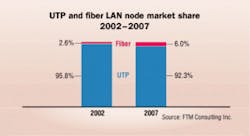

Fiber-in-the-horizontal is a relatively small but growing application space. For example, the percentage of LAN nodes that are fiber-based is projected to double by 2007, and the LAN cabling market for fiber cable is expected to grow at more than twice the rate of UTP cable. This growth is being driven in large part by the need for Gigabit Ethernet transmission rates and increased deployments of fiber-to-the-desk, fiber to wireless access points, and Storage Area Networks (SANs).

New drivers

The traditional reasons for using fiber in LANs and SANs are security, higher bandwidth, longer distances, lower bit error rates (BER), easier testing, and stronger cable pull ratings. One new driver may be a "future-proof" upgrade path to 10-Gbit/sec data rates. While some available fibers can easily support 10 Gbits/sec to 500 meters, no application standards body has yet specified a UTP cable to support 10-Gbit/sec applications.

The Institute of Electrical and Electronics Engineers (IEEE) is studying the possibility of writing a draft standard for 10-Gbit/sec over 100 meters of UTP. This 10GBase-T proposal could be published as a standard by 2006; however, if published, this standard will require a new, higher bandwidth UTP cable. So, for new installations, end users might face a dilemma. Should you install today's UTP for 1-Gbit/sec applications, then install new UTP or fiber for 10-Gbit/sec applications? Or should you install fiber to run both 1- and 10-Gbit/sec applications with no cabling system changes in the future?

Another driver can be cost. Fiber's 300-meter-plus reach enables it to support a centralized architecture, in which a single network electronics location in the basement is connected to each user. UTP has a limited 100-meter reach and so requires electronics both in the basement and in the telecommunications closet on each floor to serve end users. If an end user installs fiber-to-the-desk using a centralized system, the savings in telecommunications closet space can result in a lower-cost system than with UTP.

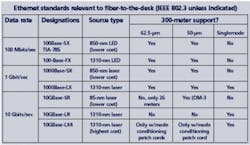

If this is what the end user chooses, the first step should be to review the applicable standards. The ANSI TIA/EIA-568-B.1/B.3 and ISO-11801 2nd Edition standards both specify a centralized cabling architecture of up to 300 meters, which can use 50-µm, 62.5-µm, or singlemode fiber. These standards include 850-nm laser-optimized 50-µm fiber, which is called 850 LO 50 in the TIA documents and is specified as OM-3 in the ISO-11801 standard. In terms of application standards for LAN fiber-to-the-desk, Ethernet dominates. The IEEE 802.3 and TIA/EIA-785 standards for Ethernet and supported media types for 300-meter applications are summarized in the table.

The next decision is to determine what type of fiber to deploy—singlemode or multimode. While singlemode fiber is ideal for applications of up to hundreds of kilometers, it requires much more expensive laser transceivers and connectors than multimode fiber. The key parameter for the decision should be the optical system cost, which is the cost of the optical transceivers, cabling system components and installation. The cost for multimode fiber-based optical systems is typically 25 to 50% lower than for singlemode. This cost delta is expected to continue due to the easier optical tolerances and lower cost 850-nm lasers enabled by the multimode fiber system.

Deciding on multimode

The next decision is to determine which type of multimode fiber to use. There are two primary types, 50-µm and 62.5-µm, and each is described by the diameter of its light-carrying core. The 62.5-µm fiber was deployed in the 1980s due to its superior optical coupling and distance with 10-Mbit/sec LEDs. For today's high-speed laser-based applications, 50-µm fiber has up to 10 times the bandwidth and distance capability of 62.5-µm fiber.

The 850 LO 50-µm fibers, such as LaserWave 300, can support 100 Mbits/sec through 10 Gbits/sec up to a distance of 300 meters, allowing an end user to install a 100-Mbit/sec system today and cost-effectively migrate to 1 Gbit/sec and then 10 Gbits/sec in the future. For the few who expect to need more than 10 Gbits/sec, 850 LO 50-µm fiber can also support the proposed 40-Gbit/sec speed for Ethernet to 300 meters using 850-nm vertical-cavity surface-emitting lasers (VCSELs).

The choice of fiber type ultimately depends on upgrade possibilities. If no upgrade to 10-Gbit/sec is envisioned, you could use a standard 50-µm fiber having a 500 MHz-km bandwidth at 850 nm to support 100 Mbit/sec and 1 Gbit/sec up to a distance of 300 meters. But since most end users installing fiber-to-the-desk typically operate bandwidth-intensive networks, an upgrade to 10-Gbit/sec is likely within the 10 to 20 year life of the cabling system. So, the 850-nm LO 50-µm fiber is the most cost-effective for protecting the investment well into the future.
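The selection rule described above can be sketched as a small helper. This is only an illustration of the article's reasoning: the function name and its parameters are hypothetical, and the 300-meter check assumes the centralized cabling limit cited from the TIA and ISO standards.

```python
CENTRALIZED_LIMIT_M = 300  # centralized cabling reach cited in the article


def recommend_multimode_fiber(upgrade_to_10g_possible: bool, link_length_m: float) -> str:
    """Return the 50-µm fiber grade suggested by the article's two scenarios.

    Hypothetical helper: it encodes only the upgrade-path rule of thumb,
    not a full standards compliance check.
    """
    if link_length_m <= 0 or link_length_m > CENTRALIZED_LIMIT_M:
        raise ValueError("link length must be between 0 and 300 meters")
    if upgrade_to_10g_possible:
        # 10 Gbits/sec at 300 meters calls for laser-optimized fiber.
        return "850-nm laser-optimized 50-µm fiber"
    # 100 Mbits/sec and 1 Gbit/sec only: standard 50-µm fiber is sufficient.
    return "standard 50-µm fiber (500 MHz-km at 850 nm)"


print(recommend_multimode_fiber(upgrade_to_10g_possible=True, link_length_m=250))
```

Because a 10-Gbit/sec upgrade is likely over a 10 to 20 year cabling life, most fiber-to-the-desk designs would fall into the first branch.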

Let's assume that a designer has selected 850-nm LO 50-µm fiber for a fiber-to-the-desk network. To support 10 Gbit/sec at 300 meters, this fiber is specified by the standards to provide 2000 MHz-km of effective modal bandwidth at 850 nm. According to both the TIA and ISO standards, this 2000 MHz-km effective modal bandwidth shall be assured by a measurement known as High Resolution Differential Mode Delay (HR-DMD). HR-DMD is specified in TIA-FOTP-220 and is a precise measurement of the worst-case pulse spreading that can occur in a 10-gigabit multimode fiber system. Lower pulse spreading translates into higher bandwidth, so the goal is to minimize DMD. To ensure the 2000 MHz-km effective modal bandwidth, the TIA and ISO standards specify the maximum DMD allowed to limit the pulse spreading at each point across the core.

These DMD specifications are tightest within a doughnut-shaped area outside of the core center, and provide the required 2000 MHz-km bandwidth when used with standards-compliant 10-Gbit/sec 850-nm lasers. But even standards-compliant 10-Gbit/sec lasers can transmit up to 30% of their power into the center of the core. While the standards allow for a relaxed specification in the center 10 microns of the core, higher performance and reliability margins can be achieved by using a fiber with a tighter central DMD specification.

Channel insertion loss

The next fiber consideration is managing the cabling system's channel insertion loss, which is the total optical loss from one end of an optical link to the other: the cable plus connections and splices. Gigabit Ethernet, 10-Gigabit Ethernet, and Fibre Channel applications have tight channel insertion loss budgets of only about 2.5 dB. Four connections are required for a TIA-compliant fiber-to-the-desk link having a crossconnect in the equipment room, an interconnect in the telecommunications closet, and a connection at the desktop outlet. In a 300-meter link, about 1 dB will be consumed by the cable, leaving only 1.5 dB for the four connections, or less than 0.4 dB per connection. One way to minimize connection loss is to use a fiber with tight dimensional specifications.
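The budget arithmetic above can be checked with a few lines of Python. This is a minimal sketch using the article's approximate figures (2.5 dB budget, about 1 dB of cable loss over 300 meters, four connections); the function name is hypothetical.

```python
def per_connection_allowance_db(budget_db: float, cable_loss_db: float, connections: int) -> float:
    """Return the loss each connection may contribute, in dB."""
    if connections <= 0:
        raise ValueError("connections must be positive")
    remaining_db = budget_db - cable_loss_db  # what is left after the cable
    if remaining_db < 0:
        raise ValueError("cable loss alone exceeds the budget")
    return remaining_db / connections


# Approximate figures from the article for a 300-meter link.
allowance = per_connection_allowance_db(budget_db=2.5, cable_loss_db=1.0, connections=4)
print(f"{allowance:.3f} dB per connection")  # prints "0.375 dB per connection"
```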

Since the primary cause of connection loss is the offset of the two mated fiber cores, the two key specifications are core-to-cladding offset and cladding diameter. Superior specifications for these two key dimensions can reduce worst-case connection loss by up to 0.5 dB per connection. In our four-connection example, looser tolerances could add up to 2 dB of connection loss (4 x 0.5 dB) to the 1.5 dB worst case typical of four good-quality connections, which could reduce the maximum 10-Gigabit Ethernet reach by up to 60 meters.
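To make the worst-case figures concrete, the sketch below totals the link loss with and without the extra 0.5 dB per connection and compares it to the roughly 2.5 dB budget. The names are hypothetical, the 0.375 dB base value is simply 1.5 dB spread evenly over four connections, and the calculation does not model the resulting reach reduction.

```python
BUDGET_DB = 2.5        # approximate channel insertion loss budget
CABLE_LOSS_DB = 1.0    # approximate cable loss over 300 meters
CONNECTIONS = 4        # connections in the fiber-to-the-desk link


def link_loss_db(loss_per_connection_db: float) -> float:
    """Total channel loss: cable loss plus all connection losses, in dB."""
    return CABLE_LOSS_DB + CONNECTIONS * loss_per_connection_db


base_per_conn_db = 1.5 / CONNECTIONS   # 0.375 dB, good-quality connections
extra_per_conn_db = 0.5                # worst-case penalty from loose tolerances

tight_total = link_loss_db(base_per_conn_db)
loose_total = link_loss_db(base_per_conn_db + extra_per_conn_db)

print(f"tight tolerances: {tight_total:.2f} dB (margin {BUDGET_DB - tight_total:.2f} dB)")
print(f"loose tolerances: {loose_total:.2f} dB (over budget by {loose_total - BUDGET_DB:.2f} dB)")
```

With these inputs the tight-tolerance link uses the whole budget and the loose-tolerance link exceeds it by the 2 dB discussed above.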

At the core

The best fiber choice for most fiber-to-the-desk installations boils down to two scenarios. In the first, the end user never anticipates a need for 10-Gbit/sec applications and can use standard or laser-certified 50-µm fiber with a 500 MHz-km bandwidth specification, the most cost-effective option for 100 Mbits/sec through 1 Gbit/sec up to the 300 meters specified for centralized cabling. In the second, a 10-Gbit/sec upgrade is possible over the lifetime of the cabling system, and 850-nm laser-optimized 50-µm fiber is the choice that protects the investment.

In either case, a fiber with superior core location tolerances should be specified to minimize channel insertion loss and maximize system reach and reliability.

John George is fiber offer development manager of systems engineering and marketing for OFS Fitel, LLC (www.ofsoptics.com).